Modern Bayesian Tools for Time Series Analysis

Table of Contents

- 1. Disclaimer

- 2. Set up

- 3. State Space Modeling

- 3.1. Mean-only model, Normal shocks (vectorized)

- 3.2. Mean-only model, Normal shocks (for loop)

- 3.3. Mean-only model, Normal shocks, generate forecast from model block

- 3.4. Mean-only model, Normal shocks, generate forecast from generated quantities

- 3.5. Mean-only model, Normal shocks, generate forecast from generated quantities with Monte Carlo simulation

- 3.6. Mean-varying model, Normal shocks (a.k.a. “local-level model”, “random walk plus noise”)

- 3.7. Mean-varying model, Normal shocks (a.k.a. “local-level model”, “random walk plus noise”), predict ahead

- 3.8. Mean-varying, linear-trend model, Normal shocks, predict ahead (a.k.a. “local linear-trend model”)

- 3.9. Mean-varying with seasonal, Normal shocks (a.k.a. “local model with stochastic seasonal”)

- 4. Autoregressive models, unit roots and cointegration

- 5. On exit: clean up

1 Disclaimer

1.1 Please read the following Disclaimer carefully

Thomas P. Harte and R. Michael Weylandt (“the Authors”) are providing this presentation and its contents (“the Content”) for educational purposes only at the R in Finance Conference, 2016-05-20, Chicago, IL. Neither of the Authors is a registered investment advisor and neither purports to offer investment advice nor business advice.

You may use any of the Content under the terms of the MIT License (see below).

The Content is provided for informational and educational purposes only and should not be construed as investment or business advice. Accordingly, you should not rely on the Content in making any investment or business decision. Rather, you should use the Content only as a starting point for doing additional independent research in order to allow you to form your own opinion regarding investment or business decisions. You are encouraged to seek independent advice from a competent professional person if you require legal, financial, tax or other expert assistance.

The Content may contain factual or typographical errors: the Content should in no way be construed as a replacement for qualified, professional advice. There is no guarantee that use of the Content will be profitable. Equally, there is no guarantee that use of the Content will not result in losses.

THE AUTHORS SPECIFICALLY DISCLAIM ANY PERSONAL LIABILITY, LOSS OR RISK INCURRED AS A CONSEQUENCE OF THE USE AND APPLICATION, EITHER DIRECTLY OR INDIRECTLY, OF THE CONTENT. THE AUTHORS SPECIFICALLY DISCLAIM ANY REPRESENTATION, WHETHER EXPLICIT OR IMPLIED, THAT APPLYING THE CONTENT WILL LEAD TO SIMILAR RESULTS IN A BUSINESS SETTING. THE RESULTS PRESENTED IN THE CONTENT ARE NOT NECESSARILY TYPICAL AND SHOULD NOT DETERMINE EXPECTATIONS OF FINANCIAL OR BUSINESS RESULTS.

1.2 MIT License

The MIT License:

Copyright (c) 2016 Thomas P. Harte & R. Michael Weylandt (see below). Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

2 Set up

source("time_series_functions.R")
.init.proj()

## we'll always use the same seed:
`Random seed :: Modern Bayesian Tools for Time Series Analysis, R in Finance 2016` <- function() {
  as.integer(as.Date("2016-05-20"))
}
`get_seed` <- `Random seed :: Modern Bayesian Tools for Time Series Analysis, R in Finance 2016`

tabs <- read_time_series_data()
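The seed is just the conference date, expressed as a day count since 1970-01-01. The minimal base-R sketch below re-creates `get_seed()` from the set-up above. It then shows how re-seeding makes an R-side simulation repeatable. The `set.seed()` calls are our illustration and are not part of the authors' `.init.proj()` set-up:

```r
# Same definition as the set-up's get_seed(), under its short name
get_seed <- function() {
  as.integer(as.Date("2016-05-20"))  # days since 1970-01-01
}

seed <- get_seed()
print(seed)  # 16941

# Re-seeding before each draw makes the two draws identical
set.seed(seed)
a <- rnorm(3)
set.seed(seed)
b <- rnorm(3)
identical(a, b)
```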

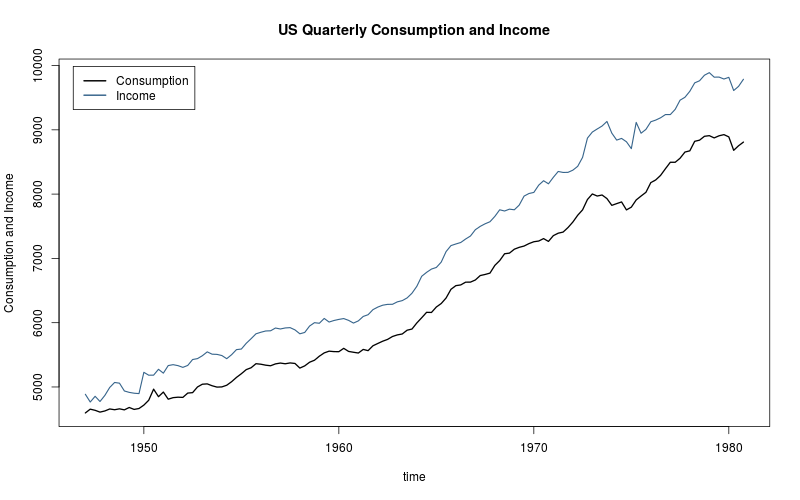

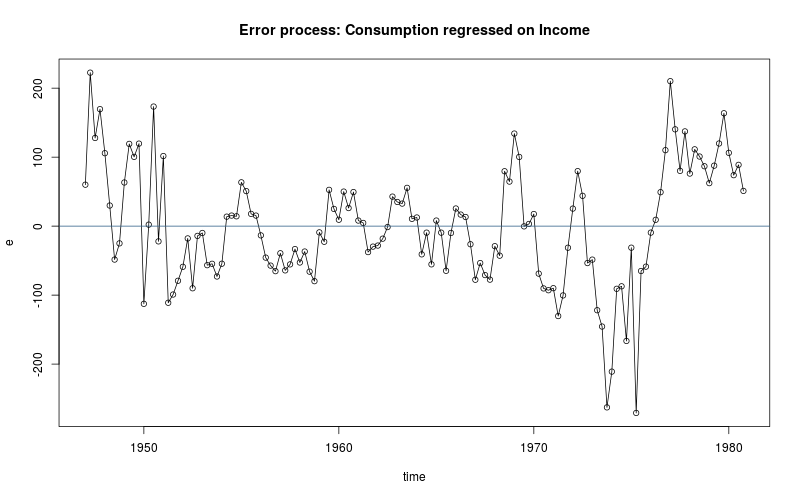

2.1 Data sourced

Most of the data accompany the text:

“Introduction to Time Series Analysis and Forecasting” (2008) Montgomery, D. C., Jennings, C. L. and Kulahci, M., John Wiley & Sons, Inc., Hoboken, NJ.

and can be downloaded from Wiley’s FTP site.

2.2 Plot time series

2.2.1 Plot

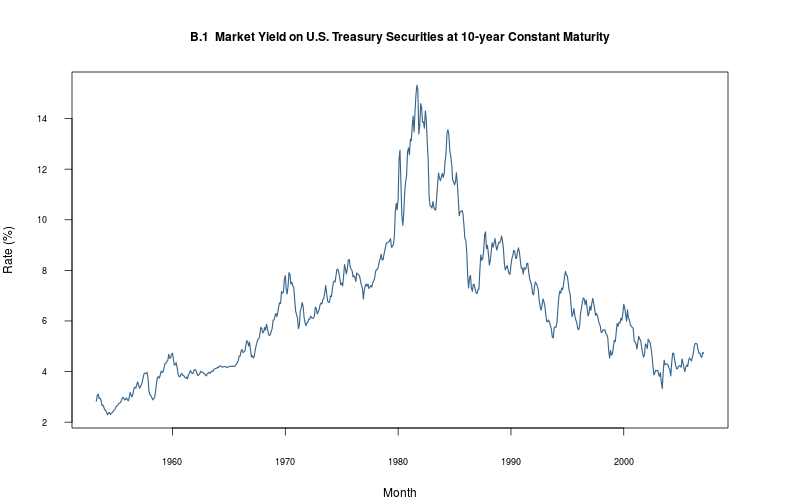

plot_ts(sheet="B.1-10YTCM", fmla=`Rate (%)` ~ `Month`, type="l")

2.2.2 Plot

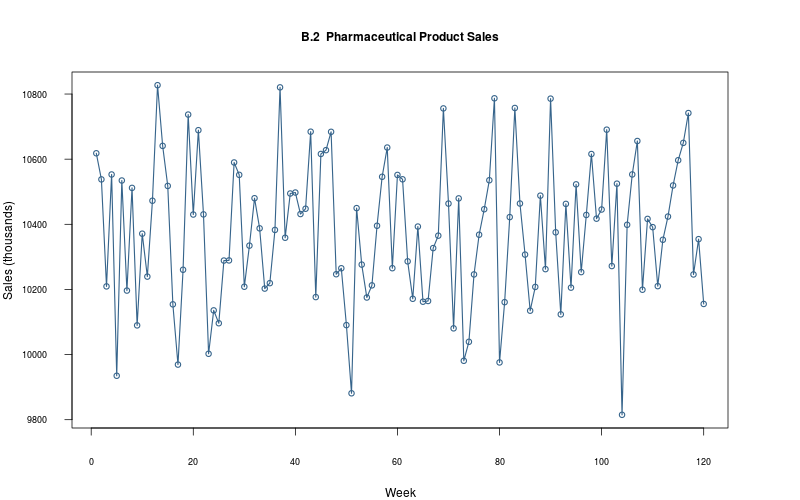

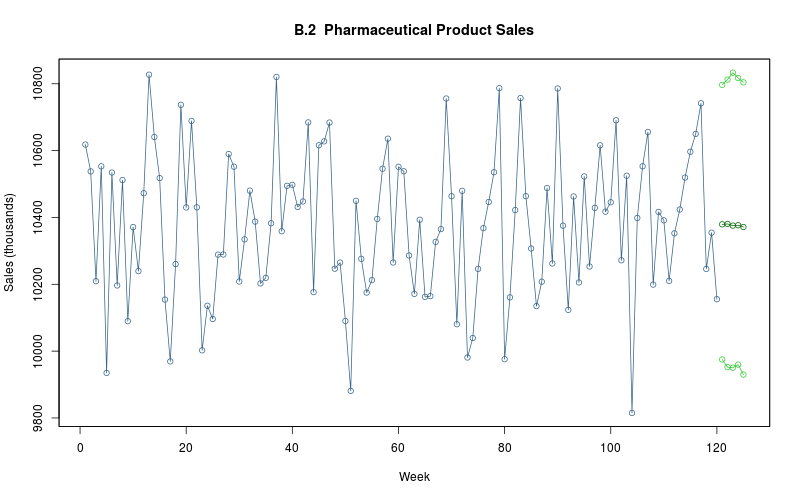

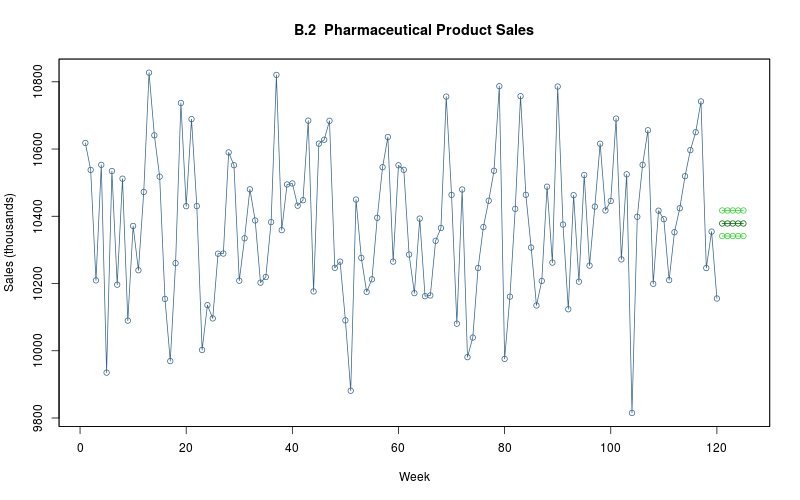

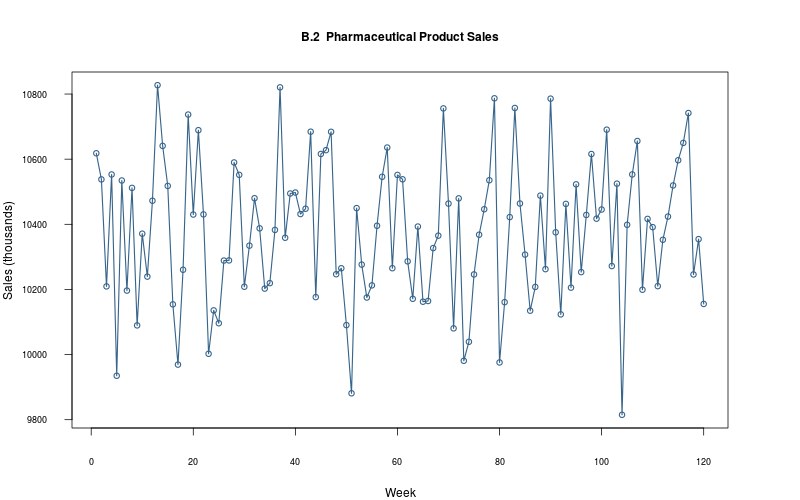

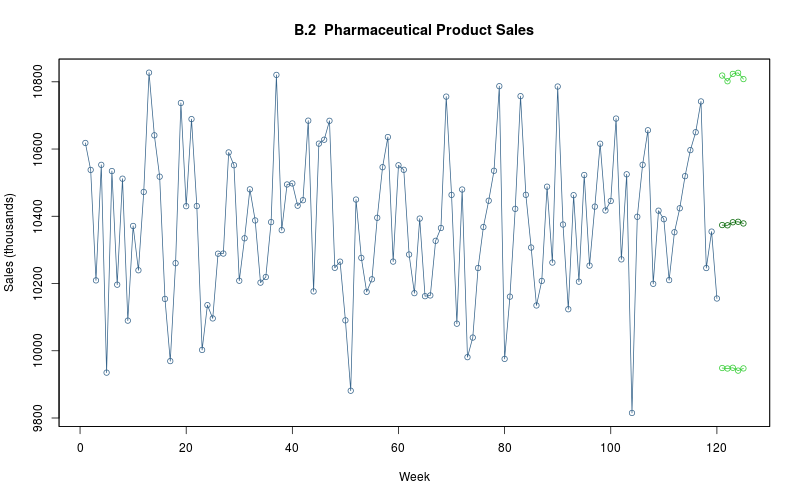

plot_ts(sheet="B.2-PHAR", fmla=`Sales (thousands)` ~ `Week`)

2.2.3 Plot

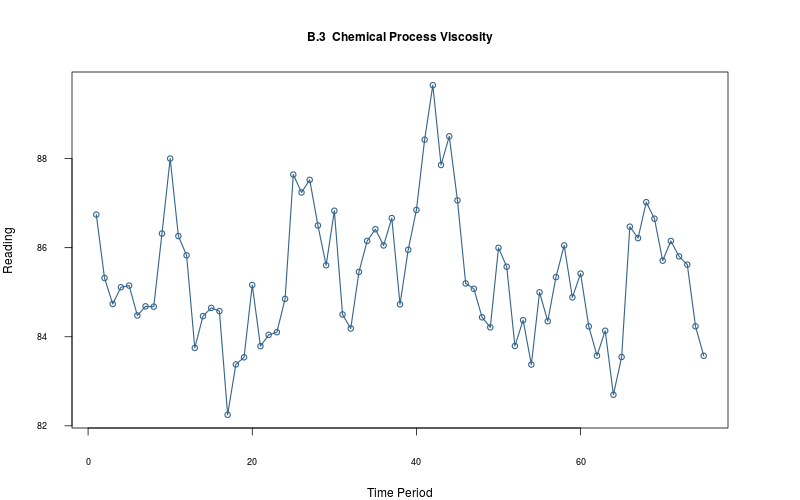

plot_ts(sheet="B.3-VISC", fmla=`Reading` ~ `Time Period`)

2.2.4 Plot

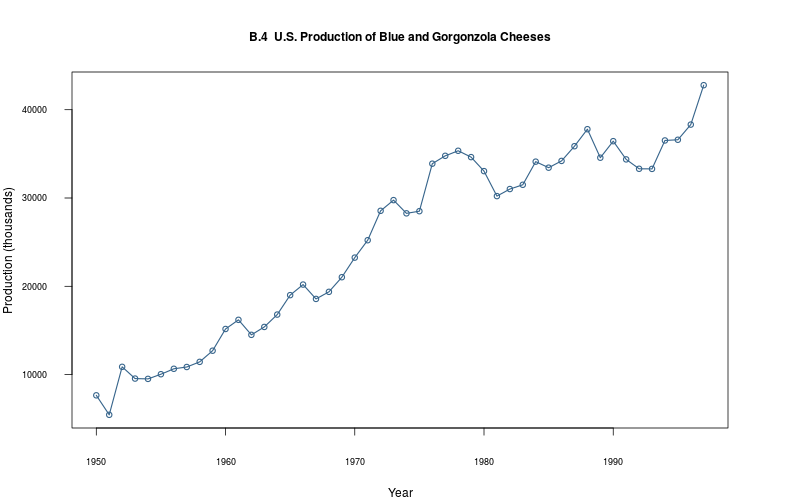

plot_ts(sheet="B.4-BLUE", fmla=`Production (thousands)` ~ `Year`)

2.2.5 Plot

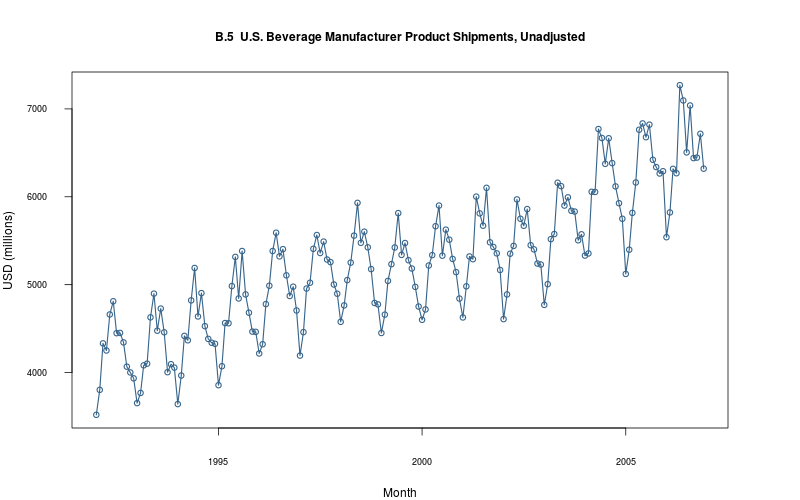

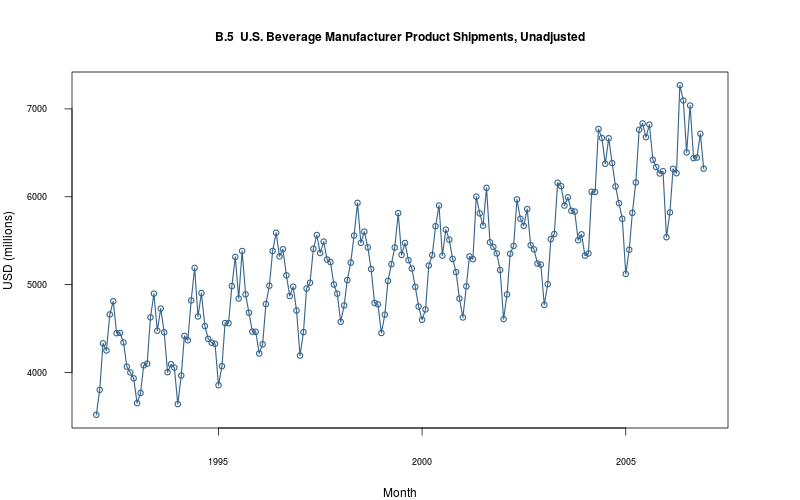

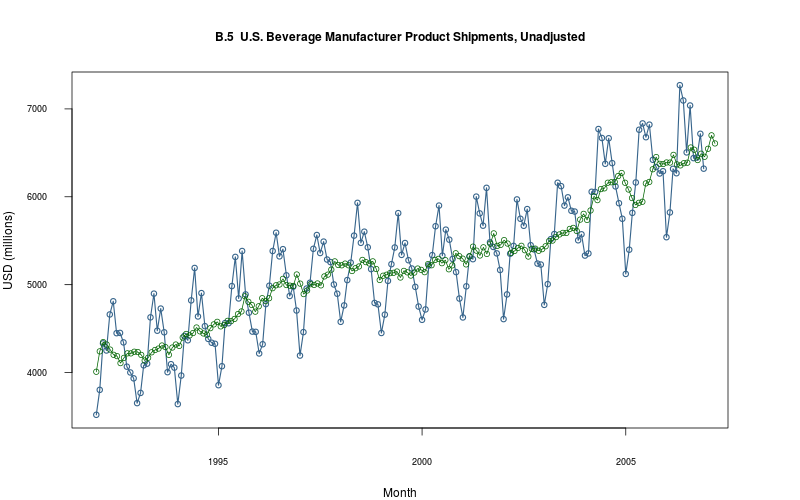

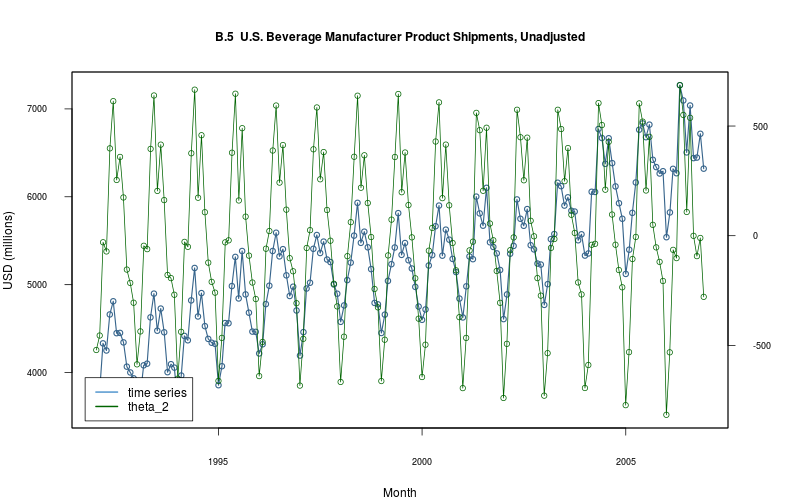

plot_ts(sheet="B.5-BEV", fmla=`USD (millions)` ~ `Month`)

2.2.6 Plot

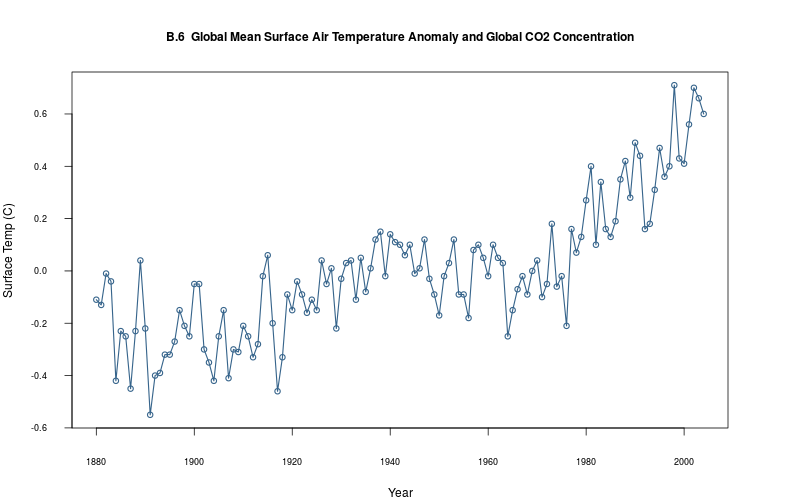

plot_ts(sheet="B.6-GSAT-CO2", fmla=`Surface Temp (C)` ~ `Year`)

2.2.7 Plot

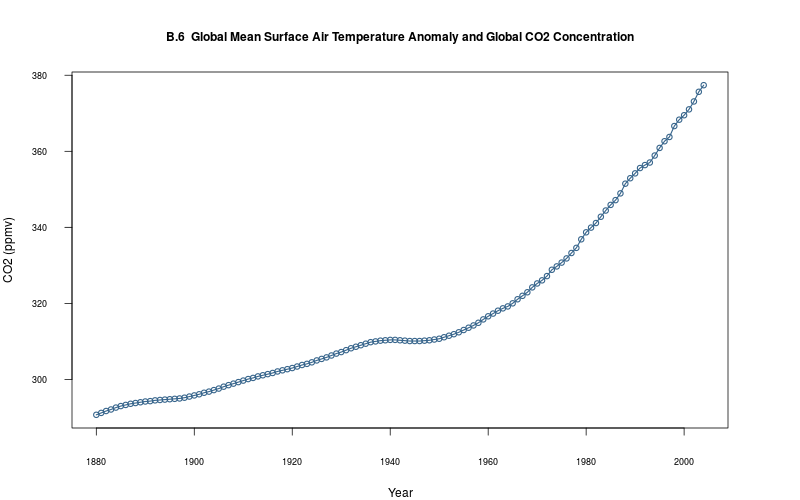

plot_ts(sheet="B.6-GSAT-CO2", fmla=`CO2 (ppmv)` ~ `Year`)

2.2.8 Plot

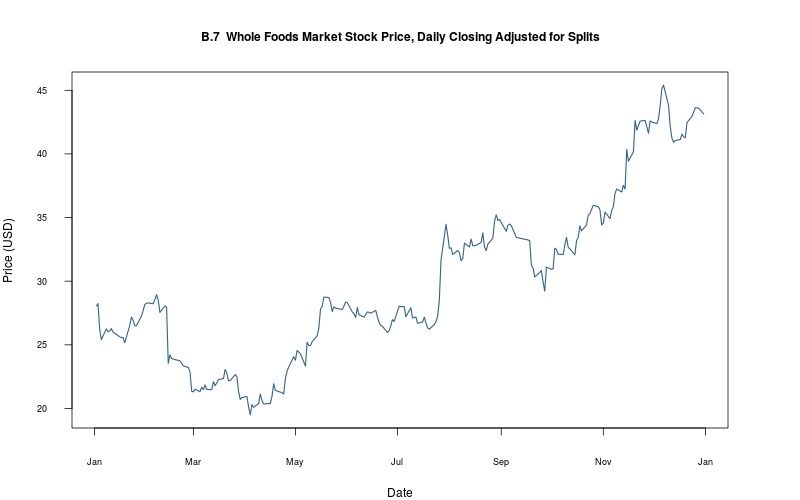

plot_ts(sheet="B.7-WFMI", fmla=`Price (USD)` ~ `Date`, type="l")

2.2.9 Plot

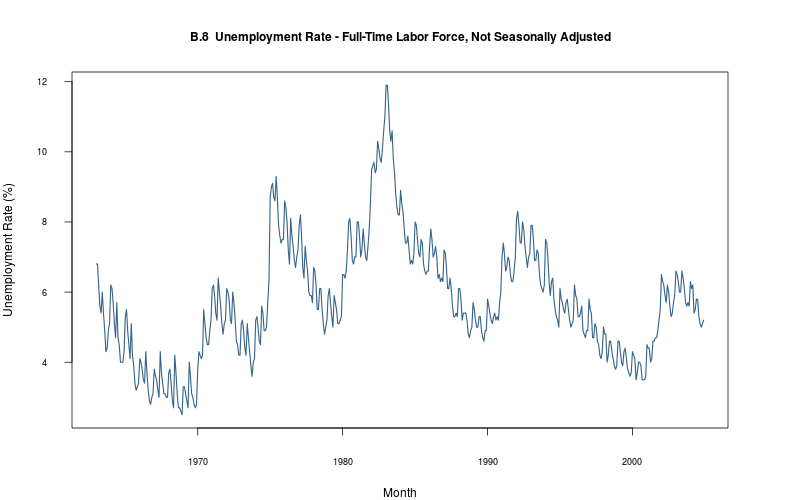

plot_ts(sheet="B.8-UNEMP", fmla=`Unemployment Rate (%)` ~ `Month`, type="l")

2.2.10 Plot

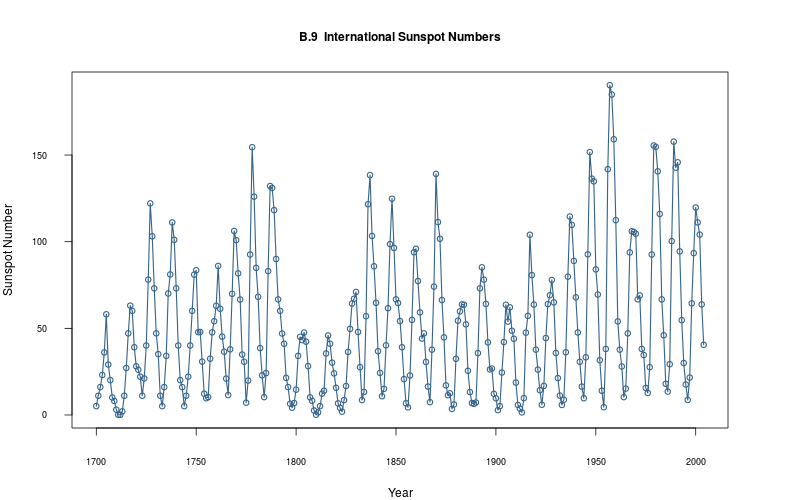

plot_ts(sheet="B.9-SUNSPOT", fmla=`Sunspot Number` ~ `Year`)

2.2.11 Plot

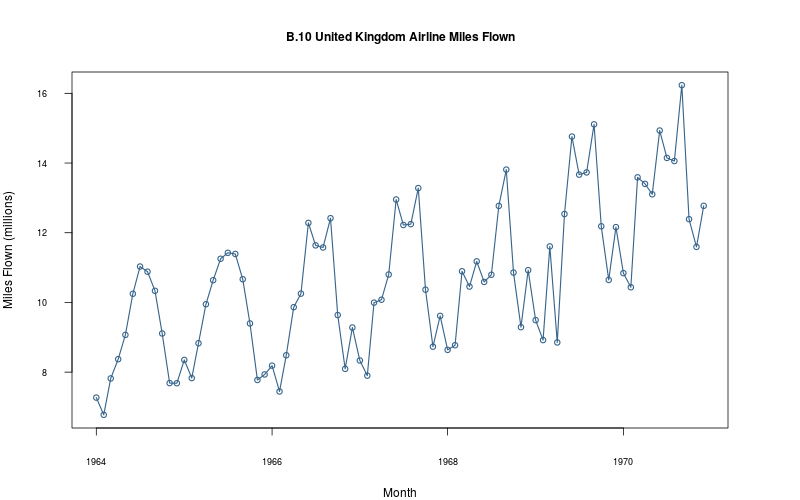

plot_ts(sheet="B.10-FLOWN", fmla=`Miles Flown (millions)` ~ `Month`)

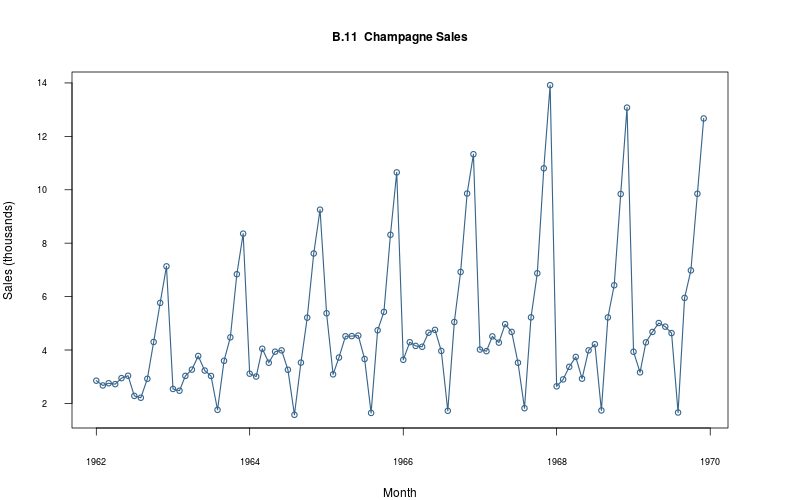

2.2.12 Plot

plot_ts(sheet="B.11-CHAMP", fmla=`Sales (thousands)` ~ `Month`)

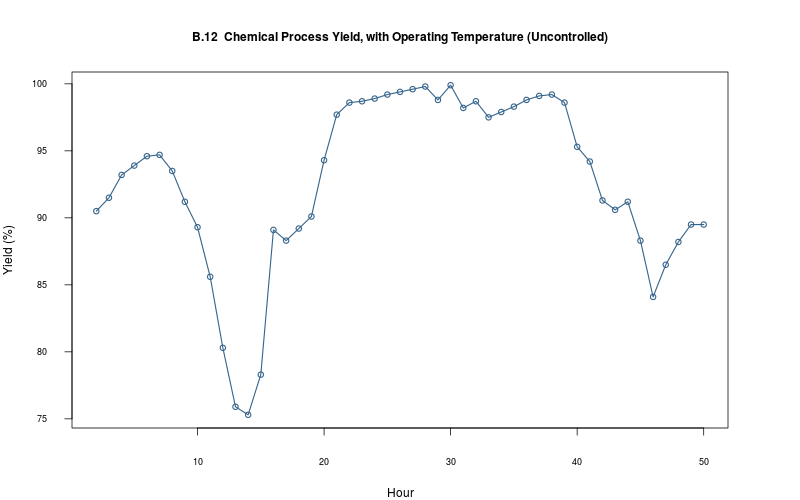

2.2.13 Plot

plot_ts(sheet="B.12-YIELD", fmla=`Yield (%)` ~ `Hour`)

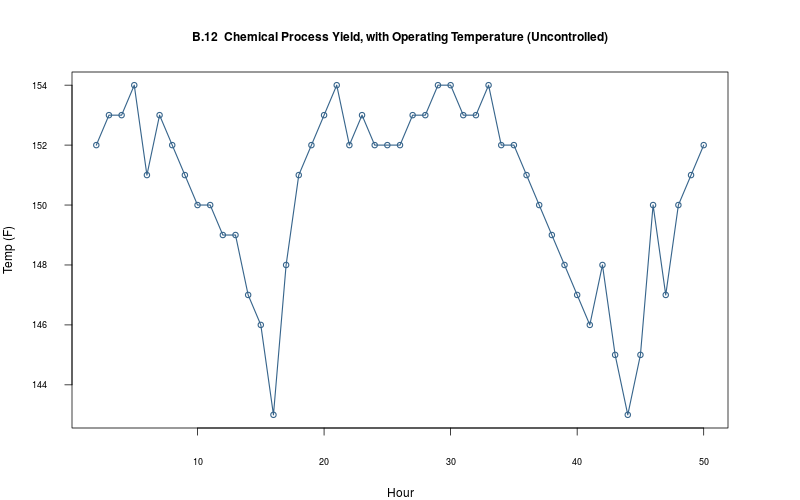

2.2.14 Plot

plot_ts(sheet="B.12-YIELD", fmla=`Temp (F)` ~ `Hour`)

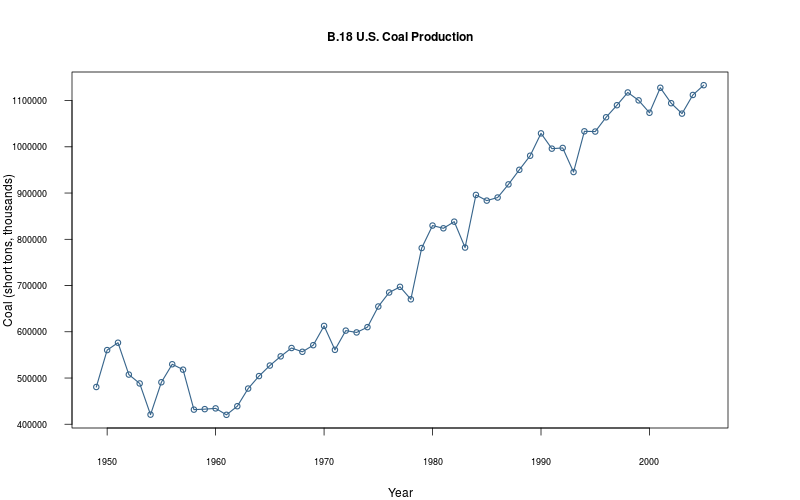

2.2.15 Plot

plot_ts(sheet="B.18-COAL", fmla=`Coal (short tons, thousands)` ~ `Year`)

2.2.16 Plot

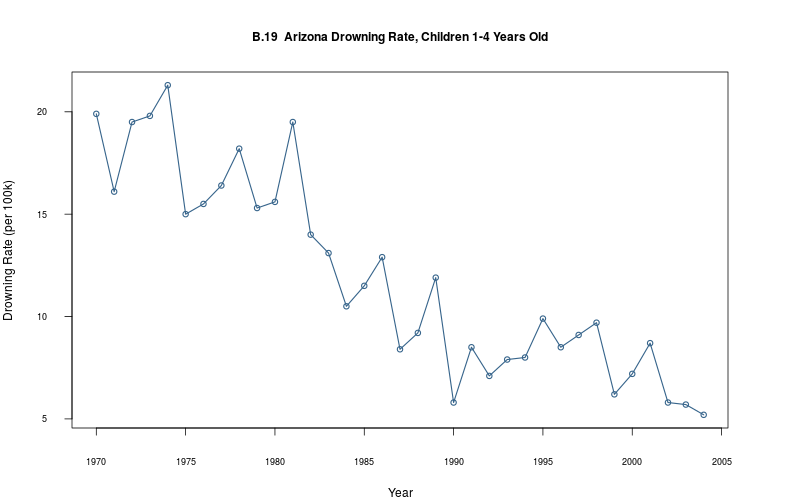

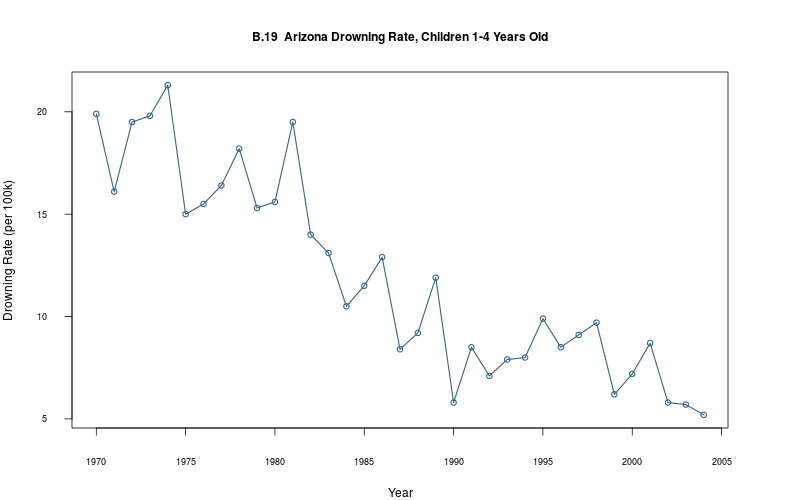

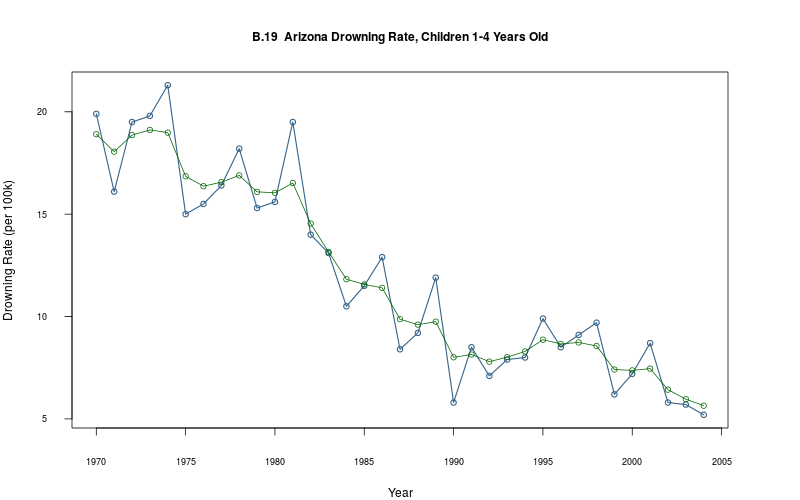

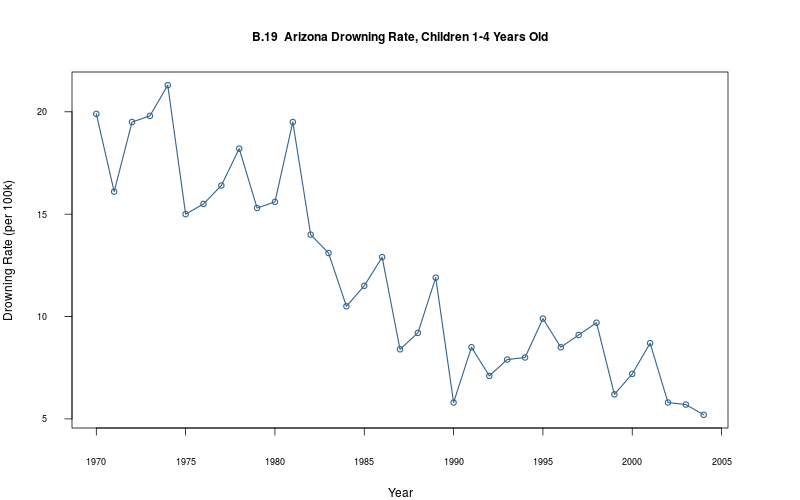

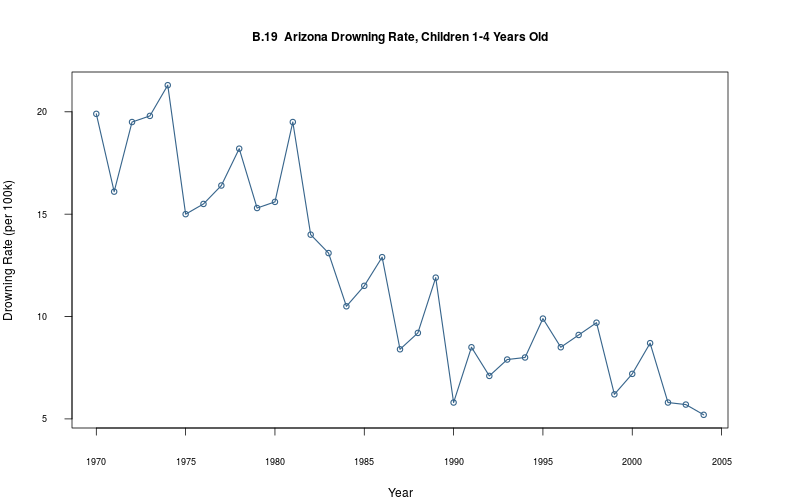

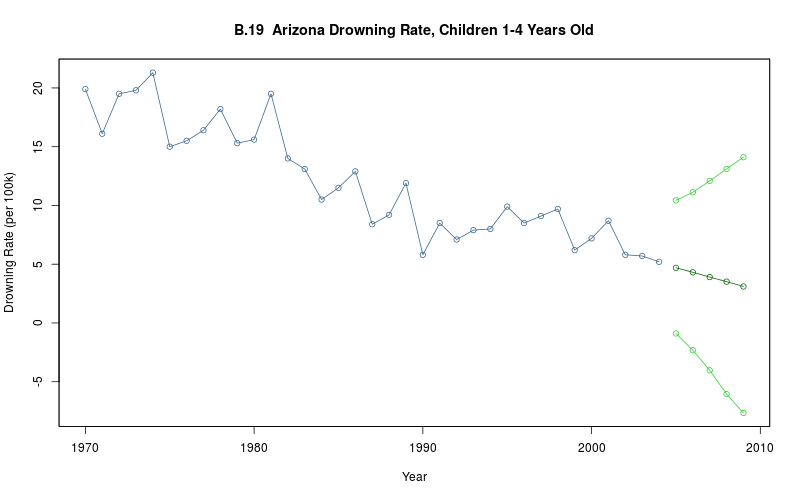

plot_ts(sheet="B.19-DROWN", fmla=`Drowning Rate (per 100k)` ~ `Year`)

This is a particularly interesting, albeit macabre, short time series. I could not locate the original report (or reports) for the time period in question (1970–2004). I did find one on the Arizona Department of Health Services website: the AZDHS publishes such tables regularly, and one set of these tables covers the period 2001–2011.

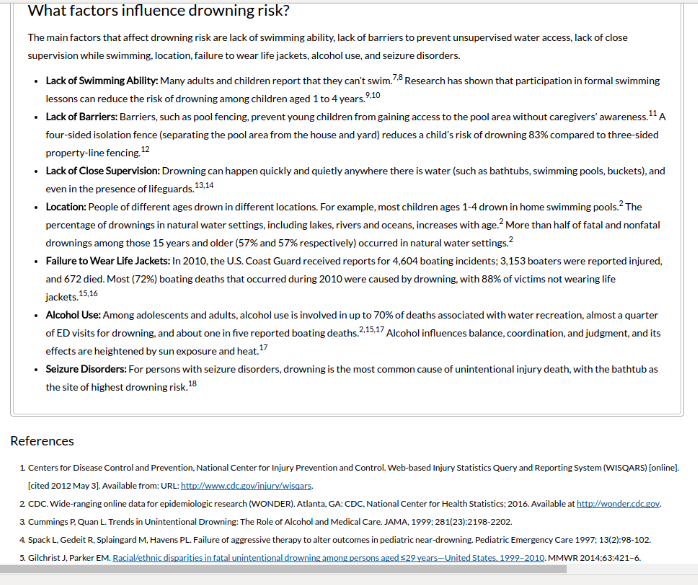

Regrettably, I failed to find an explanation for the precipitous fall in deaths over the 1970–2004 period, either in the book or on the AZDHS site. It would be interesting to compare these figures with mortality tables from other states. The CDC has some salient information:

3 State Space Modeling

3.1 Mean-only model, Normal shocks (vectorized)

3.1.1 Create model

model<- make_model( sheet="B.2-PHAR", model="mean-only_normal_vectorized", force=FALSE )

3.1.2 Closure: capture a model

make_model

function(sheet,
         model,
         response = NULL,
         T_new = NULL,
         seasonal = NULL,
         force = FALSE,
         iter = 2000,
         n.chains = 3) {
  .binfilename <- sprintf(
    "stan/%s_%s%s_%d_s%d.rds",
    model,
    sheet,
    ifelse(is.null(response), "", paste0("_", response)),
    ifelse(is.null(T_new), 0, T_new),
    ifelse(is.null(seasonal), 0, seasonal)
  )

  if (!file.exists(.binfilename) || isTRUE(force)) {
    ## construct the environment
    this <- new.env()
    .sheet <- sheet
    .model <- model
    .response <- response
    .T_new <- T_new
    .Lambda <- seasonal
    .chains <- n.chains
    .iter <- iter
    rm(sheet, model, response, T_new, n.chains, iter)

    bind_to_env(get_seed, env = this)

    .data <- get_data_block(
      .sheet,
      response = .response,
      model.name = .model,
      T_new = .T_new,
      Lambda = .Lambda
    )
    .model.file <- get_model_filename(.model)
    .fit <- get_stanfit(
      .data,
      model = .model,
      .iter = .iter,
      .chains = .chains
    )

    ## accessors: each closure captures the values computed above
    `binfilename` <- function() {
      .binfilename
    }
    `sheet` <- function() {
      .sheet
    }
    `model` <- function() {
      .model
    }
    `model_filename` <- function() {
      .model.file
    }
    `show_model_file` <- function() {
      cat(readLines(.model.file), sep = "\n")
      invisible()
    }
    `y` <- function() {
      .data[["y"]]
    }
    `T` <- function() {
      .data[["T"]]
    }
    `T_new` <- function() {
      .data[["T_new"]]
    }
    `T_end` <- function() {
      if (is.null(T_new())) return(T())
      T() + T_new()
    }
    `fit` <- function() {
      .fit
    }
    `extract_par` <- function(par, start, end) {
      assert(any(grepl(par, names(this$fit()))))
      theta <- rstan::extract(this$fit())[[par]]
      if (is.na(dim(theta)[2]))
        theta <- matrix(theta, ncol = 1)
      theta_mean <- apply(theta, 2, mean)
      ## central 95% posterior interval: the 2.5% and 97.5% quantiles
      theta_quantiles <- apply(theta, 2, quantile, c(.025, .975))
      time_index <- get_t(this$sheet(), start = start, end = end)
      out <- time_index %>%
        mutate(
          theta_mean = theta_mean,
          theta_lower = theta_quantiles["2.5%", ],
          theta_upper = theta_quantiles["97.5%", ]
        )
      colnames(out) <- gsub("theta", par, colnames(out))
      out
    }
    `plot_y_tilde` <- function() {
      y_tilde <- extract_par(
        "y_tilde",
        start = this$T() + 1,
        end = this$T_end()
      )
      y_comb <- bind_rows(
        tabs[[this$sheet()]],
        y_tilde
      )
      z <- zoo(
        y_comb[, setdiff(colnames(y_comb), get_t_name(this$sheet()))] %>% as.matrix,
        order.by = y_comb[, get_t_name(this$sheet())] %>% as.matrix
      )
      plot(
        z[, 1:2],
        col = c("steelblue4", "darkgreen"),
        screen = 1,
        main = gsub("Table ", "", get_name(this$sheet())),
        xlab = get_t_name(this$sheet()),
        ylab = colnames(tabs[[this$sheet()]])[2],
        ylim = c(min(z, na.rm = TRUE), max(z, na.rm = TRUE)),
        type = "o"
      )
      ## lower and upper interval bounds
      lines(z[, 3], col = "limegreen", type = "o")
      lines(z[, 4], col = "limegreen", type = "o")
    }

    bind_to_env(`binfilename`, env = this)
    bind_to_env(`sheet`, env = this)
    bind_to_env(`model`, env = this)
    bind_to_env(`model_filename`, env = this)
    bind_to_env(`show_model_file`, env = this)
    bind_to_env(`y`, env = this)
    bind_to_env(`T`, env = this)
    bind_to_env(`T_new`, env = this)
    bind_to_env(`T_end`, env = this)
    bind_to_env(`fit`, env = this)
    bind_to_env(`extract_par`, env = this)
    bind_to_env(`plot_y_tilde`, env = this)

    class(this) <- "model"
    ## assert(fully_converged(fit))
    saveRDS(this, file = .binfilename)
  } else {
    this <- readRDS(.binfilename)
    assert(this$binfilename() == .binfilename)
  }

  this
}
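`make_model()` combines two ideas. First, the accessor functions close over the fitted objects (a closure). Second, the whole environment is cached to an `.rds` file keyed by the model settings, so re-running a chunk with `force=FALSE` reloads the fit instead of re-sampling.

Below is a stripped-down sketch of that pattern in base R only. `make_cached_model()`, its arguments and the temporary cache directory are hypothetical names for illustration, not part of the authors' code:

```r
# Hypothetical, minimal version of the closure-plus-cache pattern in make_model()
make_cached_model <- function(key, compute, cache_dir = tempdir(), force = FALSE) {
  path <- file.path(cache_dir, sprintf("%s.rds", key))
  if (!file.exists(path) || isTRUE(force)) {
    this <- new.env()
    .value <- compute()                # the expensive step (e.g. sampling) runs here
    this$key   <- function() key       # accessors close over the captured values
    this$value <- function() .value
    this$path  <- function() path
    class(this) <- "cached_model"
    saveRDS(this, file = path)         # serializes the accessors and their enclosures
  } else {
    this <- readRDS(path)              # reload instead of recomputing
    stopifnot(identical(this$path(), path))
  }
  this
}

# Usage: the second call is served from the cache, so compute() runs only once
n_calls <- 0
expensive <- function() {
  n_calls <<- n_calls + 1
  sum(1:10)
}
m1 <- make_cached_model("demo", expensive, force = TRUE)
m2 <- make_cached_model("demo", expensive)
m2$value()
n_calls
```

As in `make_model()`, the cache key must encode every setting that changes the result. Otherwise a stale fit is silently reused.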

3.1.3 What’s in your model?

as.matrix(vapply(objects(env=model), function(x) class(get(x, env=model)), character(1)))

                [,1]
binfilename     "function"
extract_par     "function"
fit             "function"
get_seed        "function"
model           "function"
model_filename  "function"
plot_y_tilde    "function"
sheet           "function"
show_model_file "function"
T               "function"
T_end           "function"
T_new           "function"
y               "function"

3.1.4 Show Stan code

We use vectorization for this particular example because the Stan code is then nearly isomorphic to the mathematical model. A vectorized sampling statement is also evaluated more efficiently by Stan than the equivalent loop:

model$show_model_file()

// ---------------------------------------------------------------------
// model name: mean-only, normal [vectorized: no for loop over index t]
data {
  int<lower=1> T;
  real y[T];
}
parameters {
  real theta;
  real<lower=0> sigma;
}
model {
  y ~ normal(theta, sigma);
}

cf. the model itself:

\begin{equation} y_t \sim \mathscr{N}(\theta, \sigma^2) \end{equation}

3.1.5 Fitted Stan model

model$fit()

Inference for Stan model: mean-only_normal_vectorized.

3 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=3000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

theta 10378.67 0.47 20.24 10338.88 10365.08 10378.85 10392.43 10418.39 1838 1

sigma 219.32 0.37 14.61 192.21 209.33 218.41 228.38 249.52 1573 1

lp__ -700.97 0.04 1.05 -703.81 -701.35 -700.64 -700.23 -699.97 794 1

Samples were drawn using NUTS(diag_e) at Mon May 30 23:40:31 2016.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).
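The Stan program declares no prior statements, so Stan implicitly uses improper flat priors on `theta` and on `sigma > 0`. Under those priors, integrating out sigma leaves a marginal posterior for theta proportional to (sum over t of (y_t - theta)^2)^(-(T-1)/2). That is a Student-t centred at the sample mean. The posterior mean of `theta` should therefore sit at the sample mean, with a posterior sd close to s/sqrt(T).

The sketch below checks this on a grid. Synthetic data stand in for the B.2-PHAR series, so the numbers are not those in the table above:

```r
set.seed(16941)
y <- rnorm(120, mean = 10380, sd = 220)   # synthetic data, not B.2-PHAR
n <- length(y)
ybar <- mean(y)
s <- sd(y)
se <- s / sqrt(n)

# Unnormalized log marginal posterior of theta (flat priors on theta and sigma > 0)
log_post <- function(th) -(n - 1) / 2 * log(sum((y - th)^2))

grid <- seq(ybar - 8 * se, ybar + 8 * se, length.out = 4001)
lp <- vapply(grid, log_post, numeric(1))
w <- exp(lp - max(lp))
w <- w / sum(w)                            # normalized grid weights

post_mean <- sum(w * grid)
post_sd <- sqrt(sum(w * (grid - post_mean)^2))
c(post_mean = post_mean, ybar = ybar, post_sd = post_sd, se = se)
```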

3.1.6 Assess fit

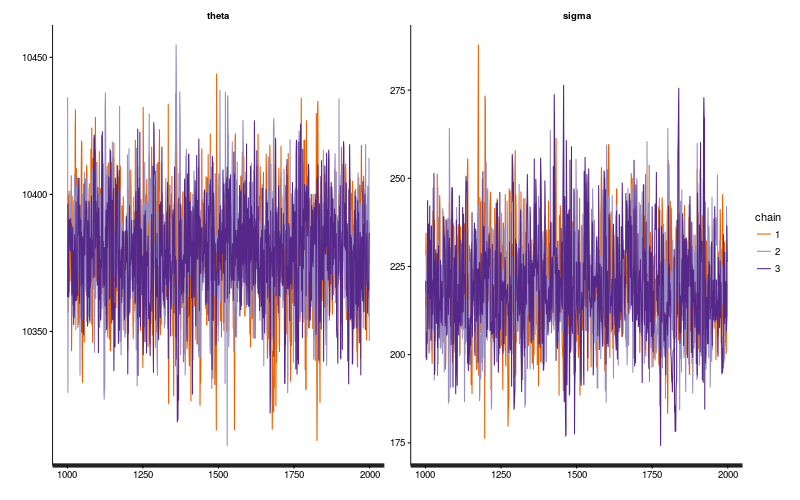

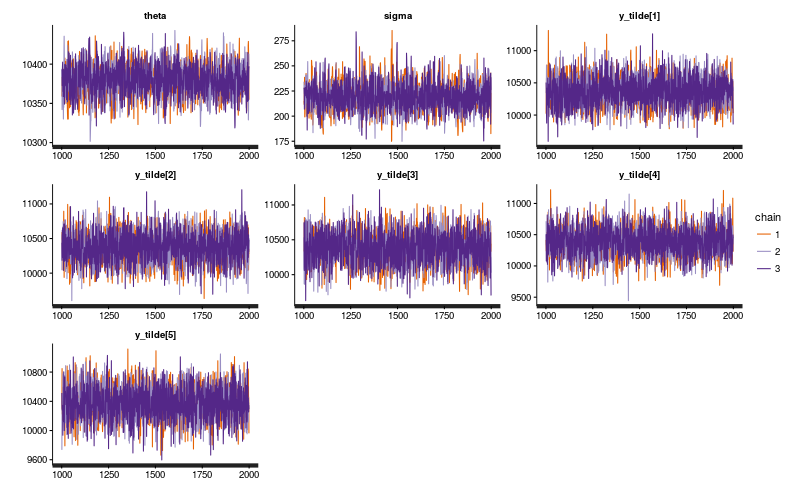

traceplot(model$fit(), inc_warmup=FALSE)

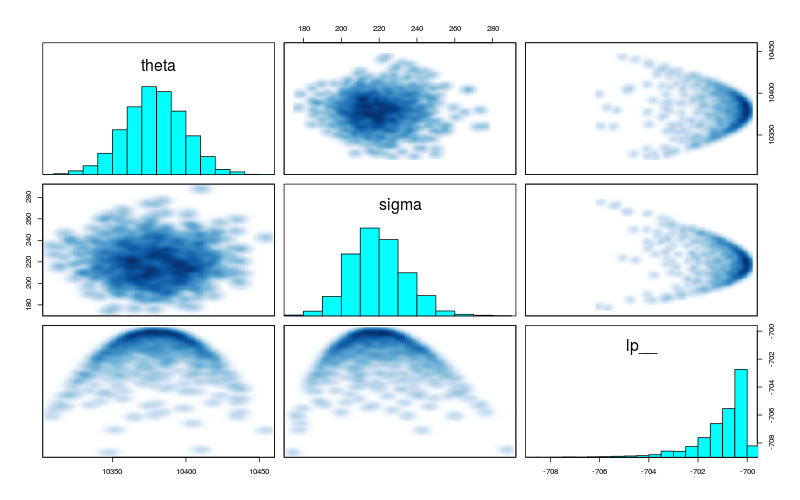

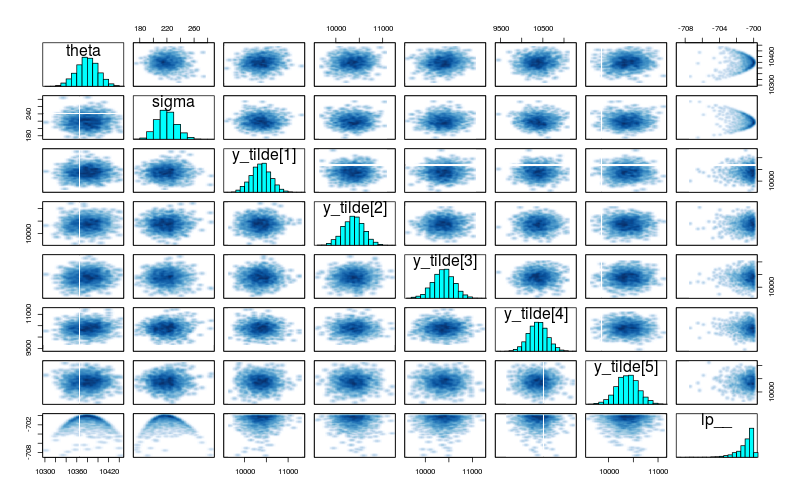

pairs(model$fit())

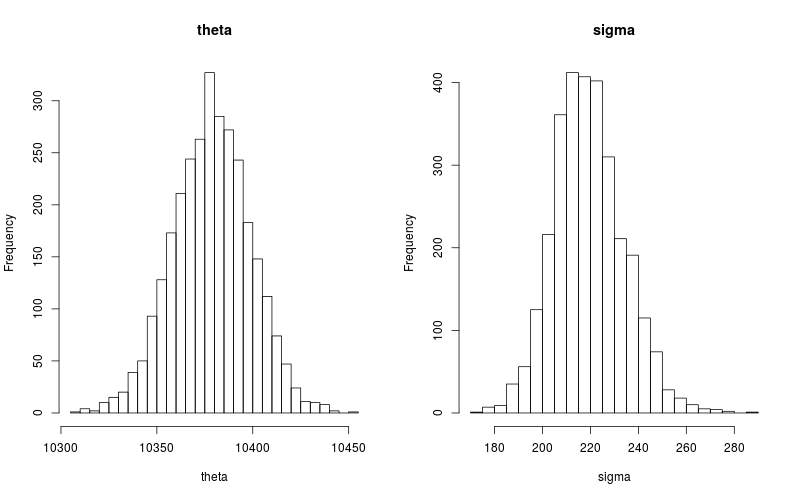

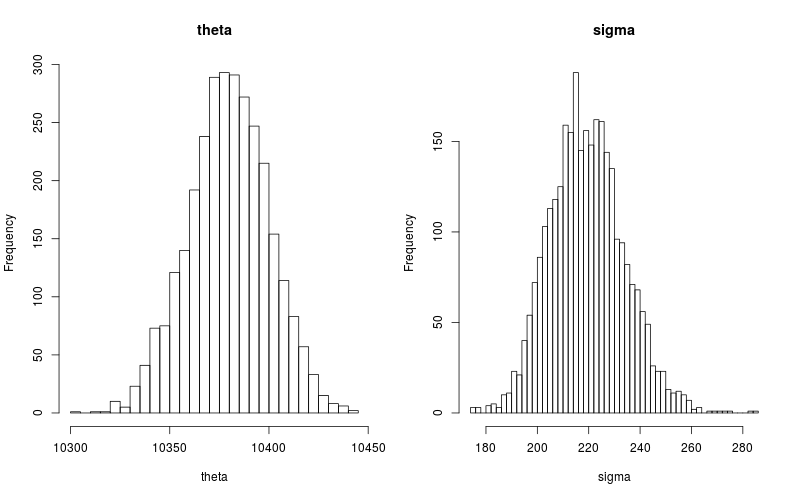

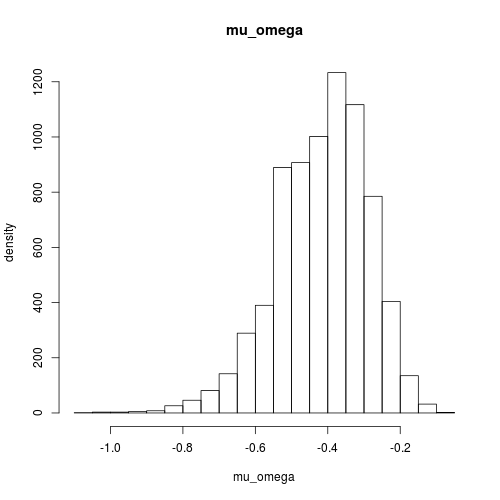

par(mfrow=c(1,2))
hist(extract(model$fit())[["theta"]], breaks=30, main="theta", xlab="theta")
hist(extract(model$fit())[["sigma"]], breaks=30, main="sigma", xlab="sigma")

3.1.7 Simulate from fit (mean-posterior predictive fit)

We can get the posterior mean in one of two ways:

(theta <- get_posterior_mean(model$fit(), par="theta")[, "mean-all chains"])
(sigma <- get_posterior_mean(model$fit(), par="sigma")[, "mean-all chains"])

[1] 10378.67
[1] 219.322

(theta <- mean(extract(model$fit())[["theta"]]))
(sigma <- mean(extract(model$fit())[["sigma"]]))

[1] 10378.67
[1] 219.322

and then simulate from the posterior mean (this is a “posterior predictive fit”, not a forecast!):

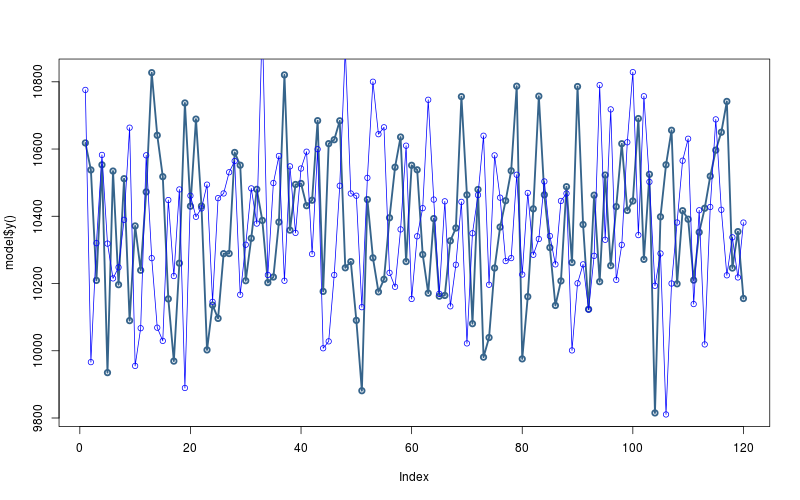

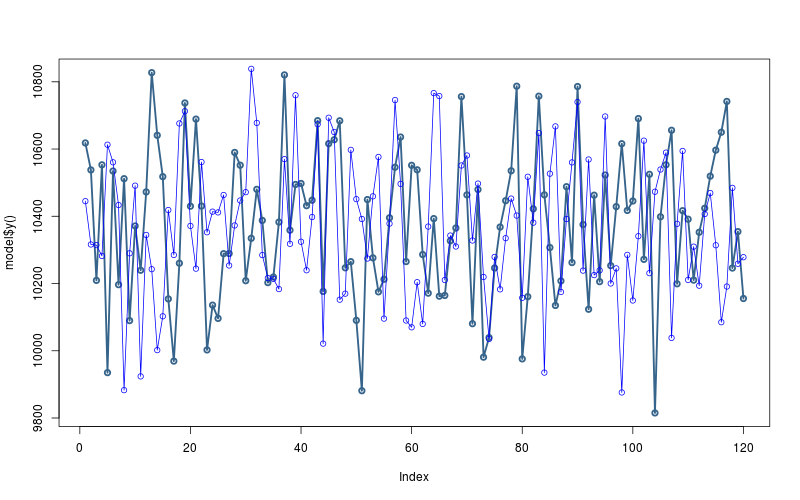

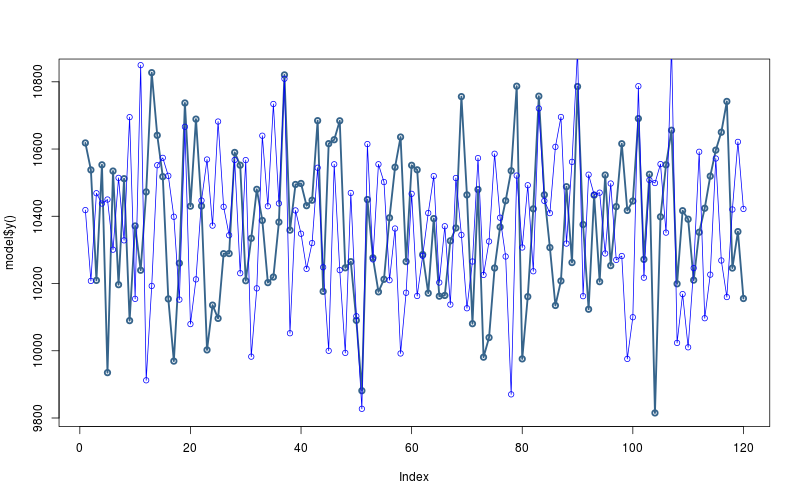

theta <- mean(extract(model$fit())[["theta"]])
sigma <- mean(extract(model$fit())[["sigma"]])
y.sim <- rnorm(model$T(), mean=theta, sd=sigma)
plot(model$y(), col="steelblue4", lwd=2.5, type="o")
lines(y.sim, type="o", col="blue")

Note that this simulation collapses the posterior into a single point estimate (the posterior mean of each parameter) and simulates from the model at that point. It therefore ignores the uncertainty in the parameter estimates. This is not the same as integrating over the posterior density, which averages the predictive distribution over all posterior draws.
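To make the distinction concrete, compare two ways of simulating:

- A plug-in simulation draws every y from a single (theta, sigma).
- The posterior predictive draws one y for each posterior draw (theta^(s), sigma^(s)), carrying parameter uncertainty into the simulated values.

The base-R sketch below contrasts the two. The "posterior draws" are synthetic stand-ins loosely matching the summary printed above; with a real fit they would come from `extract(model$fit())`:

```r
set.seed(16941)
S <- 3000
# Synthetic stand-ins for posterior draws (not the actual Stan output)
theta_draws <- rnorm(S, mean = 10379, sd = 20)
sigma_draws <- abs(rnorm(S, mean = 219, sd = 15))

# Plug-in: a single point estimate for each parameter
y_plugin <- rnorm(S, mean = mean(theta_draws), sd = mean(sigma_draws))

# Posterior predictive: one simulated y per posterior draw; rnorm() is
# vectorized over the paired (theta, sigma) draws
y_pred <- rnorm(S, mean = theta_draws, sd = sigma_draws)

c(plugin_sd = sd(y_plugin), predictive_sd = sd(y_pred))
```

Here the two spreads are close because the posterior of (theta, sigma) is narrow relative to sigma itself. The gap widens for shorter series or more diffuse posteriors.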

3.2 Mean-only model, Normal shocks (for loop)

3.2.1 Create model

model<- make_model( sheet="B.2-PHAR", model="mean-only_normal", force=FALSE )

3.2.2 Show Stan code

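We used vectorization in the previous example, but from here on we prefer the for loop because it generalizes to the remaining examples. It is of particular advantage in time-series models, where the distribution of y[t] typically depends on quantities indexed by t (such as a time-varying level), which a loop over t expresses directly.

We’re not going to worry too much about optimizing the model set-up; rather, we’ll focus on model use.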
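The vectorized statement `y ~ normal(theta, sigma)` and the loop over `t` add the same amount to the log density: the sum over t of the Normal log density of each y[t]. Stan's `~` statement also drops additive constants, but it does so identically in both forms.

The small base-R check below confirms the equivalence on synthetic data (made-up values, not the B.2-PHAR series):

```r
set.seed(16941)
y <- rnorm(120, mean = 10000, sd = 200)   # synthetic series, not the PHAR data
theta <- 10000
sigma <- 200

# "Vectorized": one call over the whole vector
ll_vec <- sum(dnorm(y, mean = theta, sd = sigma, log = TRUE))

# "for loop": accumulate one term per time index t
ll_loop <- 0
for (t in seq_along(y)) {
  ll_loop <- ll_loop + dnorm(y[t], mean = theta, sd = sigma, log = TRUE)
}

all.equal(ll_vec, ll_loop)
```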

model$show_model_file()

// ---------------------------------------------------------------------
// model name: mean-only, normal [uses for loop over index t]
data {
  int<lower=1> T;
  real y[T];
}
parameters {
  real theta;
  real<lower=0> sigma;
}
model {
  for (t in 1:T) {
    y[t] ~ normal(theta, sigma);
  }
}

cf. the model itself:

\begin{eqnarray} y_t &\sim& \mathscr{N}(\theta, \sigma^2) \\[3mm] \Leftrightarrow y_t &=& \theta + \varepsilon_t, \;\;\;\;\; \varepsilon_t \;\sim\; \mathscr{N}(0, \sigma^2) \end{eqnarray}

3.2.3 Fitted Stan model

model$fit()

Inference for Stan model: mean-only_normal.

3 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=3000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

theta 10378.52 0.44 19.70 10340.91 10364.80 10378.21 10391.80 10417.24 1998 1

sigma 219.66 0.36 14.25 193.86 209.66 218.93 228.58 249.12 1566 1

lp__ -700.91 0.03 0.96 -703.49 -701.31 -700.63 -700.21 -699.96 940 1

Samples were drawn using NUTS(diag_e) at Mon May 30 23:41:05 2016.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).

3.2.4 Simulate from fit

theta <- mean(extract(model$fit())[["theta"]])
sigma <- mean(extract(model$fit())[["sigma"]])
y.sim <- rnorm(model$T(), mean=theta, sd=sigma)
plot(model$y(), col="steelblue4", lwd=2.5, type="o")
lines(y.sim, type="o", col="blue")

3.3 Mean-only model, Normal shocks, generate forecast from model block

3.3.1 Create model

Note: we will generate T_new new data points out of sample, i.e. we will forecast T_new steps ahead.

model<- make_model( sheet="B.2-PHAR", model="mean-only_normal_predict_new_model_block", T_new=5, force=FALSE )

SAMPLING FOR MODEL 'mean-only_normal_predict_new_model_block' NOW (CHAIN 1).

Chain 1, Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 1, Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 1, Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 1, Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 1, Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 1, Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 1, Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 1, Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 1, Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 1, Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 1, Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 1, Iteration: 2000 / 2000 [100%] (Sampling)#

# Elapsed Time: 0.72597 seconds (Warm-up)

# 0.030108 seconds (Sampling)

# 0.756078 seconds (Total)

#

SAMPLING FOR MODEL 'mean-only_normal_predict_new_model_block' NOW (CHAIN 2).

Chain 2, Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 2, Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 2, Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 2, Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 2, Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 2, Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 2, Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 2, Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 2, Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 2, Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 2, Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 2, Iteration: 2000 / 2000 [100%] (Sampling)#

# Elapsed Time: 0.794401 seconds (Warm-up)

# 0.033499 seconds (Sampling)

# 0.8279 seconds (Total)

#

SAMPLING FOR MODEL 'mean-only_normal_predict_new_model_block' NOW (CHAIN 3).

Chain 3, Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 3, Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 3, Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 3, Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 3, Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 3, Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 3, Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 3, Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 3, Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 3, Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 3, Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 3, Iteration: 2000 / 2000 [100%] (Sampling)#

# Elapsed Time: 0.833951 seconds (Warm-up)

# 0.035478 seconds (Sampling)

# 0.869429 seconds (Total)

#

The following numerical problems occured the indicated number of times after warmup on chain 3

count

Exception thrown at line 19: normal_log: Scale parameter is 0, but must be > 0! 1

When a numerical problem occurs, the Metropolis proposal gets rejected.

However, by design Metropolis proposals sometimes get rejected even when there are no numerical problems.

Thus, if the number in the 'count' column is small, do not ask about this message on stan-users.

3.3.2 Show Stan code

model$show_model_file()

// ---------------------------------------------------------------------
// model name: mean-only, normal [predict T_new in model block]
data {
  int<lower=1> T;
  real y[T];
  int<lower=0> T_new;
}
parameters {
  real theta;
  real<lower=0> sigma;
  vector[T_new] y_tilde;
}
model {
  for (t in 1:T) {
    y[t] ~ normal(theta, sigma);
  }
  for (t in 1:T_new) {
    y_tilde[t] ~ normal(theta, sigma);
  }
}

cf. the model itself:

\begin{eqnarray} y_t &\sim& \mathscr{N}(\theta, \sigma^2) \\[3mm] \Leftrightarrow y_t &=& \theta + \varepsilon_t, \;\;\;\;\; \varepsilon_t \;\sim\; \mathscr{N}(0, \sigma^2) \end{eqnarray}

3.3.3 Fitted Stan model

model$fit()

Inference for Stan model: mean-only_normal_predict_new_model_block.

3 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=3000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

theta 10379.48 0.40 20.01 10340.25 10365.92 10379.11 10392.10 10419.46 2503 1.00

sigma 219.72 0.29 14.35 193.27 209.61 219.12 229.23 249.23 2385 1.00

y_tilde[1] 10379.42 4.15 211.31 9968.39 10234.45 10385.18 10524.61 10796.74 2595 1.00

y_tilde[2] 10380.57 4.70 222.01 9938.44 10232.95 10382.46 10525.15 10812.51 2230 1.00

y_tilde[3] 10375.66 4.54 230.59 9940.10 10220.16 10370.44 10528.45 10833.30 2581 1.00

y_tilde[4] 10376.72 4.56 226.31 9955.13 10226.05 10372.99 10525.91 10817.21 2462 1.00

y_tilde[5] 10371.47 4.35 220.84 9920.32 10228.42 10374.29 10522.11 10804.19 2577 1.00

lp__ -730.40 0.06 1.95 -735.08 -731.46 -730.08 -729.01 -727.65 1102 1.01

Samples were drawn using NUTS(diag_e) at Mon May 30 23:57:39 2016.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).

3.3.4 Forecast

model$plot_y_tilde()

3.4 Mean-only model, Normal shocks, generate forecast from generated quantities

3.4.1 Create model

model<- make_model( sheet="B.2-PHAR", model="mean-only_normal_predict_new_generated_quantities", T_new=5, force=FALSE )

SAMPLING FOR MODEL 'mean-only_normal_predict_new_generated_quantities' NOW (CHAIN 1). Chain 1, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 1, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 1, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 1, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 1, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 1, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 1, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 1, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 1, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 1, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 1, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 1, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.218478 seconds (Warm-up) # 0.022934 seconds (Sampling) # 0.241412 seconds (Total) # SAMPLING FOR MODEL 'mean-only_normal_predict_new_generated_quantities' NOW (CHAIN 2). Chain 2, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 2, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 2, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 2, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 2, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 2, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 2, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 2, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 2, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 2, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 2, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 2, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.236686 seconds (Warm-up) # 0.019834 seconds (Sampling) # 0.25652 seconds (Total) # SAMPLING FOR MODEL 'mean-only_normal_predict_new_generated_quantities' NOW (CHAIN 3). 
Chain 3, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 3, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 3, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 3, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 3, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 3, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 3, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 3, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 3, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 3, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 3, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 3, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.270393 seconds (Warm-up) # 0.022084 seconds (Sampling) # 0.292477 seconds (Total) #

3.4.2 Show Stan code

model$show_model_file()

// ---------------------------------------------------------------------
// model name: mean-only, normal [predicts T_new values in y_tilde]
data {
  int<lower=1> T;
  real y[T];
  int<lower=0> T_new;
}
parameters {
  real theta;
  real<lower=0> sigma;
}
model {
  for (t in 1:T) {
    y[t] ~ normal(theta, sigma);
  }
}
generated quantities {
  vector[T_new] y_tilde;
  for (t in 1:T_new) {
    y_tilde[t] <- theta;
  }
}

cf. the model itself:

\begin{eqnarray} y_t &\sim& \mathscr{N}(\theta, \sigma^2) \\[3mm] \Leftrightarrow y_t &=& \theta + \varepsilon_t, \;\;\;\;\; \varepsilon_t \;\sim\; \mathscr{N}(0, \sigma^2) \end{eqnarray}

3.4.3 Fitted Stan model

model$fit()

Inference for Stan model: mean-only_normal_predict_new_generated_quantities.

3 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=3000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

theta 10378.52 0.44 19.70 10340.91 10364.80 10378.21 10391.80 10417.24 1998 1

sigma 219.66 0.36 14.25 193.86 209.66 218.93 228.58 249.12 1566 1

y_tilde[1] 10378.52 0.44 19.70 10340.91 10364.80 10378.21 10391.80 10417.24 1998 1

y_tilde[2] 10378.52 0.44 19.70 10340.91 10364.80 10378.21 10391.80 10417.24 1998 1

y_tilde[3] 10378.52 0.44 19.70 10340.91 10364.80 10378.21 10391.80 10417.24 1998 1

y_tilde[4] 10378.52 0.44 19.70 10340.91 10364.80 10378.21 10391.80 10417.24 1998 1

y_tilde[5] 10378.52 0.44 19.70 10340.91 10364.80 10378.21 10391.80 10417.24 1998 1

lp__ -700.91 0.03 0.96 -703.49 -701.31 -700.63 -700.21 -699.96 940 1

Samples were drawn using NUTS(diag_e) at Mon May 30 23:58:12 2016.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).
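Every `y_tilde[t]` row above reproduces the `theta` row exactly. The generated quantities block assigns `y_tilde[t] <- theta`, so each `y_tilde[t]` is a copy of the posterior draw of the mean and carries no observation noise. Replacing the assignment with `normal_rng(theta, sigma)`, as in the next section, adds a Normal shock per draw.

The R sketch below mimics the per-draw logic of the two blocks. Synthetic draws stand in for the Stan output:

```r
set.seed(16941)
S <- 3000       # number of posterior draws
T_new <- 5      # forecast horizon
theta <- rnorm(S, mean = 10379, sd = 20)        # synthetic stand-ins for draws
sigma <- abs(rnorm(S, mean = 219, sd = 15))

# As in 3.4: y_tilde[t] <- theta (the same theta draw at every horizon)
y_tilde_copy <- matrix(theta, nrow = S, ncol = T_new)

# As in 3.5: y_tilde[t] <- normal_rng(theta, sigma) (a fresh shock per draw and horizon);
# theta and sigma recycle down each column, so row s always uses draw s
y_tilde_rng <- matrix(rnorm(S * T_new, mean = theta, sd = sigma), nrow = S, ncol = T_new)

rbind(copy = apply(y_tilde_copy, 2, sd), rng = apply(y_tilde_rng, 2, sd))
```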

3.4.4 Forecast

model$plot_y_tilde()

3.5 Mean-only model, Normal shocks, generate forecast from generated quantities with Monte Carlo simulation

3.5.1 Create model

model<- make_model( sheet="B.2-PHAR", model="mean-only_normal_predict_new_generated_quantities_mc", T_new=5, force=FALSE )

SAMPLING FOR MODEL 'mean-only_normal_predict_new_generated_quantities_mc' NOW (CHAIN 1). Chain 1, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 1, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 1, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 1, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 1, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 1, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 1, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 1, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 1, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 1, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 1, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 1, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.273756 seconds (Warm-up) # 0.025425 seconds (Sampling) # 0.299181 seconds (Total) # SAMPLING FOR MODEL 'mean-only_normal_predict_new_generated_quantities_mc' NOW (CHAIN 2). Chain 2, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 2, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 2, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 2, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 2, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 2, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 2, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 2, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 2, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 2, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 2, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 2, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.231618 seconds (Warm-up) # 0.022441 seconds (Sampling) # 0.254059 seconds (Total) # SAMPLING FOR MODEL 'mean-only_normal_predict_new_generated_quantities_mc' NOW (CHAIN 3). 
Chain 3, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 3, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 3, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 3, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 3, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 3, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 3, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 3, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 3, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 3, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 3, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 3, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.211353 seconds (Warm-up) # 0.026607 seconds (Sampling) # 0.23796 seconds (Total) #

plot_ts(sheet=model$sheet(), fmla=`Sales (thousands)` ~ `Week`);

3.5.2 Show Stan code

model$show_model_file()

// ---------------------------------------------------------------------
// model name: mean-only, normal [predicts T_new values in y_tilde]
data {
  int<lower=1> T;
  real y[T];
  int<lower=0> T_new;
}
parameters {
  real theta;
  real<lower=0> sigma;
}
model {
  for (t in 1:T) {
    y[t] ~ normal(theta, sigma);
  }
}
generated quantities {
  vector[T_new] y_tilde;
  for (t in 1:T_new) {
    y_tilde[t] <- normal_rng(theta, sigma);
  }
}

cf. the model itself:

\begin{equation} y_t \sim \mathscr{N}(\theta, \sigma^2) \end{equation}

3.5.3 Fitted Stan model

model$fit()

Inference for Stan model: mean-only_normal_predict_new_generated_quantities_mc.

3 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=3000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

theta 10379.71 0.46 20.31 10339.12 10366.58 10379.96 10393.80 10418.62 1950 1

sigma 219.31 0.35 14.46 193.32 209.35 218.74 228.57 249.14 1667 1

y_tilde[1] 10373.81 4.12 225.76 9933.51 10221.13 10375.88 10520.34 10818.54 3000 1

y_tilde[2] 10373.10 3.99 218.41 9942.35 10221.92 10377.23 10523.32 10801.63 3000 1

y_tilde[3] 10382.18 4.21 223.86 9940.05 10234.29 10384.17 10529.67 10823.47 2823 1

y_tilde[4] 10383.65 4.08 223.68 9931.30 10235.25 10386.39 10530.31 10826.64 3000 1

y_tilde[5] 10378.64 4.05 221.95 9932.72 10232.54 10373.66 10529.40 10808.04 3000 1

lp__ -700.97 0.03 1.04 -703.71 -701.34 -700.67 -700.24 -699.97 904 1

Samples were drawn using NUTS(diag_e) at Mon May 30 23:58:45 2016.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).
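The spread of `y_tilde` can be sanity-checked against the `theta` and `sigma` rows. By the law of total variance, Var(y_tilde) = E[sigma^2] + Var(theta), where E[sigma^2] = (posterior mean of sigma)^2 + (posterior sd of sigma)^2. Plugging in the summary values printed above:

```r
# Posterior summaries copied from the table above
sigma_mean <- 219.31
sigma_sd <- 14.46
theta_sd <- 20.31

# Law of total variance: Var(y_tilde) = E[sigma^2] + Var(theta)
E_sigma2 <- sigma_mean^2 + sigma_sd^2
pred_sd <- sqrt(E_sigma2 + theta_sd^2)
round(pred_sd, 1)  # 220.7
```

This agrees with the `y_tilde` sd column (roughly 218 to 226) up to Monte Carlo error.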

3.5.4 Assess fit

## layout(matrix(c(1,2,3,3), byrow=TRUE, nc=2))
traceplot(model$fit(), inc_warmup=FALSE)

pairs(model$fit())

par(mfrow=c(1,2))
hist(extract(model$fit())[["theta"]], breaks=50, main="theta", xlab="theta")
hist(extract(model$fit())[["sigma"]], breaks=50, main="sigma", xlab="sigma")

3.5.5 Simulate from fit (mean-posterior predictive fit)

We can get the posterior mean in one of two ways:

(theta <- get_posterior_mean(model$fit(), par="theta")[, "mean-all chains"])
(sigma <- get_posterior_mean(model$fit(), par="sigma")[, "mean-all chains"])

[1] 10379.71
[1] 219.3105

(theta <- mean(extract(model$fit())[["theta"]]))
(sigma <- mean(extract(model$fit())[["sigma"]]))

[1] 10379.71
[1] 219.3105

and then simulate from the posterior mean (this is a “posterior predictive fit”, not a forecast!):

theta <- mean(extract(model$fit())[["theta"]])
sigma <- mean(extract(model$fit())[["sigma"]])
y.sim <- rnorm(model$T(), mean=theta, sd=sigma)
plot(model$y(), col="steelblue4", lwd=2.5, type="o")
lines(y.sim, type="o", col="blue")

3.5.6 Forecast


model$plot_y_tilde()

3.6 Mean-varying model, Normal shocks (a.k.a. “local-level model”, “random walk plus noise”)
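The local-level (random walk plus noise) model lets the mean wander. Its two equations are:

- y_t = mu_t + epsilon_t
- mu_t = mu_{t-1} + eta_t

Here epsilon_t ~ N(0, sigma_epsilon^2) and eta_t ~ N(0, sigma_eta^2). The Stan program for this section is not reproduced here, so the following is only a sketch of the generative process in base R. The helper name and the values chosen for the initial level and the two standard deviations are arbitrary and hypothetical; n = 35 merely matches the length of the 1970–2004 span:

```r
# Hypothetical helper: simulate a local-level ("random walk plus noise") series
simulate_local_level <- function(n, mu0, sigma_eta, sigma_eps) {
  eta <- rnorm(n, mean = 0, sd = sigma_eta)      # level shocks eta_t
  mu <- mu0 + cumsum(eta)                        # mu_t = mu_{t-1} + eta_t, with mu_0 = mu0
  y <- mu + rnorm(n, mean = 0, sd = sigma_eps)   # y_t = mu_t + epsilon_t
  list(mu = mu, y = y)
}

set.seed(16941)
sim <- simulate_local_level(n = 35, mu0 = 4, sigma_eta = 0.3, sigma_eps = 0.5)

# With sigma_eta = 0 the level never moves: this is the mean-only model of 3.1 to 3.5
sim_flat <- simulate_local_level(n = 35, mu0 = 4, sigma_eta = 0, sigma_eps = 0.5)
range(sim_flat$mu)
```

To eyeball a draw, `plot(sim$y, type = "o")` followed by `lines(sim$mu)` overlays the latent level on the noisy observations.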

3.6.1 Create model

model<- make_model( sheet="B.19-DROWN", model="mean-varying_normal", T_new=NULL, force=FALSE )

SAMPLING FOR MODEL 'mean-varying_normal' NOW (CHAIN 1).

Chain 1, Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 1, Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 1, Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 1, Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 1, Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 1, Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 1, Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 1, Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 1, Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 1, Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 1, Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 1, Iteration: 2000 / 2000 [100%] (Sampling)#

# Elapsed Time: 0.09298 seconds (Warm-up)

# 0.078214 seconds (Sampling)

# 0.171194 seconds (Total)

#

SAMPLING FOR MODEL 'mean-varying_normal' NOW (CHAIN 2).

Chain 2, Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 2, Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 2, Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 2, Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 2, Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 2, Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 2, Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 2, Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 2, Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 2, Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 2, Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 2, Iteration: 2000 / 2000 [100%] (Sampling)#

# Elapsed Time: 0.086007 seconds (Warm-up)

# 0.074769 seconds (Sampling)

# 0.160776 seconds (Total)

#

SAMPLING FOR MODEL 'mean-varying_normal' NOW (CHAIN 3).

Chain 3, Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 3, Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 3, Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 3, Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 3, Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 3, Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 3, Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 3, Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 3, Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 3, Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 3, Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 3, Iteration: 2000 / 2000 [100%] (Sampling)#

# Elapsed Time: 0.086661 seconds (Warm-up)

# 0.0899 seconds (Sampling)

# 0.176561 seconds (Total)

#

The following numerical problems occured the indicated number of times after warmup on chain 3

count

Exception thrown at line 14: normal_log: Scale parameter is 0, but must be > 0! 1

When a numerical problem occurs, the Metropolis proposal gets rejected.

However, by design Metropolis proposals sometimes get rejected even when there are no numerical problems.

Thus, if the number in the 'count' column is small, do not ask about this message on stan-users.

plot_ts(sheet=model$sheet(), fmla=`Drowning Rate (per 100k)` ~ `Year`)

3.6.2 Show Stan code

model$show_model_file()

data {

int<lower=1> T;

vector[T] y;

}

parameters {

vector[T] theta_1;

real<lower=0> sigma_1;

real<lower=0> sigma_2;

}

model {

for (t in 1:T) {

y[t] ~ normal(theta_1[t], sigma_1);

}

for (t in 2:T) {

theta_1[t] ~ normal(theta_1[t-1], sigma_2);

}

}

cf. the model itself:

\begin{eqnarray} y_t &\sim& \mathscr{N}(\theta_{1,t}, \, \sigma_1^2) \\[3mm] \theta_{1,t+1} &\sim& \mathscr{N}(\theta_{1,t}, \, \sigma_2^2) \\[3mm] &\Leftrightarrow& \\ y_t &=& \theta_{1,t} + \varepsilon_t, \;\;\;\;\; \varepsilon_t \;\sim\; \mathscr{N}(0, \, \sigma_1^2) \;\;\left\{ \begin{array}{l} \mbox{} \\ \mbox{observation / measurement equation} \\ \mbox{} \end{array} \right. \\ \theta_{1,t+1} &=& \theta_{1,t} + \eta_t, \;\;\;\;\; \eta_t \;\sim\; \mathscr{N}(0, \, \sigma_2^2) \;\;\left\{ \begin{array}{l} \mbox{} \\ \mbox{state equation} \\ \mbox{} \end{array} \right. \end{eqnarray}

3.6.3 Fitted Stan model

model$fit()

Inference for Stan model: mean-varying_normal.

3 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=3000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

theta_1[1] 18.91 0.03 1.21 16.56 18.12 18.89 19.69 21.30 1928 1.00

theta_1[2] 18.05 0.03 1.13 15.86 17.30 18.05 18.76 20.28 1384 1.00

theta_1[3] 18.86 0.03 1.05 16.76 18.15 18.88 19.56 20.95 1575 1.00

theta_1[4] 19.12 0.03 1.10 16.91 18.41 19.09 19.84 21.31 1355 1.00

theta_1[5] 18.98 0.03 1.17 16.75 18.20 18.95 19.76 21.34 1180 1.01

theta_1[6] 16.85 0.02 1.09 14.71 16.12 16.88 17.63 18.86 1929 1.00

theta_1[7] 16.37 0.02 1.07 14.15 15.66 16.38 17.09 18.37 1895 1.00

theta_1[8] 16.56 0.02 1.02 14.52 15.89 16.61 17.24 18.52 2443 1.00

theta_1[9] 16.89 0.03 1.07 14.83 16.17 16.87 17.62 19.01 1687 1.00

theta_1[10] 16.09 0.02 1.04 14.13 15.41 16.10 16.75 18.15 3000 1.00

theta_1[11] 16.04 0.02 1.02 14.06 15.38 16.02 16.71 18.15 2381 1.00

theta_1[12] 16.52 0.04 1.28 14.18 15.64 16.48 17.32 19.26 911 1.01

theta_1[13] 14.54 0.02 1.02 12.56 13.85 14.54 15.20 16.59 2385 1.00

theta_1[14] 13.16 0.02 1.01 11.21 12.48 13.09 13.85 15.24 2162 1.00

theta_1[15] 11.82 0.03 1.08 9.65 11.10 11.81 12.52 13.95 1482 1.00

theta_1[16] 11.57 0.03 1.02 9.61 10.89 11.59 12.22 13.61 1431 1.00

theta_1[17] 11.40 0.03 1.06 9.35 10.68 11.41 12.12 13.51 1278 1.00

theta_1[18] 9.87 0.03 1.09 7.71 9.15 9.88 10.60 11.91 1117 1.00

theta_1[19] 9.60 0.03 1.03 7.56 8.90 9.63 10.27 11.61 1389 1.00

theta_1[20] 9.75 0.03 1.09 7.60 9.02 9.75 10.47 11.85 1572 1.00

theta_1[21] 8.01 0.03 1.12 5.75 7.26 8.03 8.76 10.19 1297 1.00

theta_1[22] 8.15 0.02 1.05 6.10 7.47 8.17 8.81 10.25 2234 1.00

theta_1[23] 7.80 0.02 1.03 5.73 7.11 7.81 8.49 9.81 1869 1.00

theta_1[24] 8.02 0.02 1.03 5.99 7.35 8.00 8.69 10.00 2012 1.00

theta_1[25] 8.30 0.02 1.01 6.35 7.61 8.27 8.96 10.30 1876 1.00

theta_1[26] 8.87 0.03 1.03 6.86 8.17 8.88 9.57 10.94 1414 1.00

theta_1[27] 8.66 0.02 1.03 6.55 8.02 8.67 9.33 10.67 1999 1.00

theta_1[28] 8.73 0.02 1.06 6.63 8.03 8.73 9.42 10.87 2049 1.00

theta_1[29] 8.56 0.02 1.06 6.53 7.87 8.58 9.24 10.66 1924 1.00

theta_1[30] 7.41 0.02 1.03 5.42 6.73 7.37 8.09 9.51 2118 1.00

theta_1[31] 7.37 0.02 0.98 5.47 6.72 7.35 8.01 9.31 2327 1.00

theta_1[32] 7.46 0.02 1.03 5.47 6.78 7.43 8.14 9.49 2153 1.00

theta_1[33] 6.43 0.02 1.00 4.49 5.75 6.45 7.09 8.41 2152 1.00

theta_1[34] 5.97 0.02 1.06 3.93 5.26 5.96 6.67 8.10 1834 1.00

theta_1[35] 5.65 0.03 1.28 3.14 4.80 5.63 6.51 8.14 2249 1.00

sigma_1 1.64 0.01 0.34 0.98 1.42 1.63 1.85 2.32 695 1.01

sigma_2 1.44 0.02 0.44 0.81 1.12 1.37 1.68 2.50 502 1.01

lp__ -60.01 0.35 7.26 -75.43 -64.44 -59.57 -55.13 -46.58 425 1.00

Samples were drawn using NUTS(diag_e) at Mon May 30 23:59:22 2016.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).

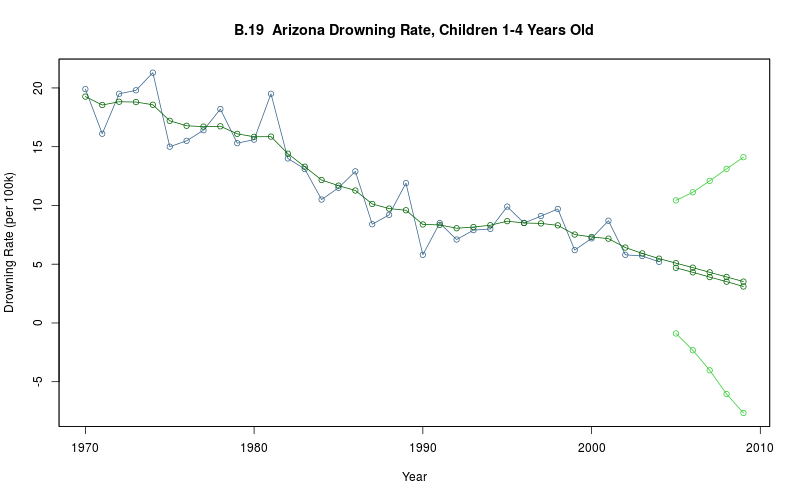

theta_1<- model$extract_par("theta_1", start=1, end=model$T())

sigma_1<- mean(extract(model$fit())[["sigma_1"]])

sigma_2<- mean(extract(model$fit())[["sigma_2"]])

plot_ts(sheet="B.19-DROWN", fmla=`Drowning Rate (per 100k)` ~ `Year`)

lines(theta_1_mean ~ Year, data=theta_1, col="darkgreen", type="o")
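With the variances held fixed, the local-level model is linear and Gaussian, so the conditional mean of the level can be computed exactly with a Kalman filter followed by a Rauch-Tung-Striebel smoother. That gives a cheap cross-check on the Stan fit: plugging in the posterior means of `sigma_1` and `sigma_2` (1.64 and 1.44 above) should give smoothed levels close to, but not identical with, the posterior means of `theta_1`, because Stan also integrates over the variances. The sketch below is a minimal base-R version under those assumptions; the near-diffuse start (`P1 = 1e7`, prior mean `y[1]`) and the synthetic series are illustrative choices, not part of the original analysis.

```r
# Kalman filter + Rauch-Tung-Striebel smoother for the local-level model
#   y_t         = theta_t + eps_t,  eps_t ~ N(0, sigma_1^2)
#   theta_{t+1} = theta_t + eta_t,  eta_t ~ N(0, sigma_2^2)
# sigma_1 and sigma_2 are treated as KNOWN (plug-in values).
local_level_smoother <- function(y, sigma_1, sigma_2, P1 = 1e7) {
  n <- length(y)
  r <- sigma_1^2
  q <- sigma_2^2
  a <- P <- numeric(n)            # filtered mean/variance of theta_t | y_1..y_t
  a_pred <- y[1]                  # prior mean for theta_1 (assumption)
  P_pred <- P1                    # large prior variance: near-diffuse start
  for (t in seq_len(n)) {
    K <- P_pred / (P_pred + r)    # Kalman gain
    a[t] <- a_pred + K * (y[t] - a_pred)
    P[t] <- P_pred * (1 - K)
    a_pred <- a[t]                # random walk: predicted level = filtered level
    P_pred <- P[t] + q
  }
  a_s <- a                        # smoothed mean/variance of theta_t | y_1..y_n
  P_s <- P
  if (n > 1) {
    for (t in (n - 1):1) {
      J <- P[t] / (P[t] + q)      # smoother gain
      a_s[t] <- a[t] + J * (a_s[t + 1] - a[t])
      P_s[t] <- P[t] + J^2 * (P_s[t + 1] - (P[t] + q))
    }
  }
  list(filtered = a, P = P, smoothed = a_s, P_s = P_s)
}

# Usage on a synthetic series, with the plug-in variances from the fit above
set.seed(42)
n <- 35
theta <- 19 + cumsum(c(0, rnorm(n - 1, sd = 1.44)))
y <- theta + rnorm(n, sd = 1.64)
fit <- local_level_smoother(y, sigma_1 = 1.64, sigma_2 = 1.44)
round(cbind(y, smoothed = fit$smoothed, sd = sqrt(fit$P_s))[1:5, ], 2)
```

On the real data, `local_level_smoother(model$y(), sigma_1, sigma_2)$smoothed` could be overlaid on the plot above, assuming `model$y()` returns the observed series as a numeric vector.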

3.7 Mean-varying model, Normal shocks (a.k.a. “local-level model”, “random walk plus noise”), predict ahead

3.7.1 Create model

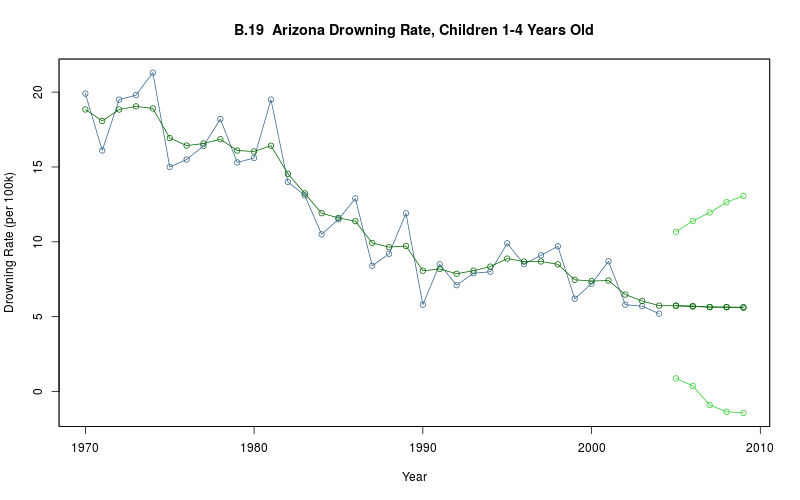

model<- make_model( sheet="B.19-DROWN", model="mean-varying_normal_predict_new", T_new=5, force=FALSE )

SAMPLING FOR MODEL 'mean-varying_normal_predict_new' NOW (CHAIN 1). Chain 1, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 1, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 1, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 1, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 1, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 1, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 1, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 1, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 1, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 1, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 1, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 1, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.126455 seconds (Warm-up) # 0.117514 seconds (Sampling) # 0.243969 seconds (Total) # SAMPLING FOR MODEL 'mean-varying_normal_predict_new' NOW (CHAIN 2). Chain 2, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 2, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 2, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 2, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 2, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 2, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 2, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 2, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 2, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 2, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 2, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 2, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.145055 seconds (Warm-up) # 0.107148 seconds (Sampling) # 0.252203 seconds (Total) # SAMPLING FOR MODEL 'mean-varying_normal_predict_new' NOW (CHAIN 3). 
Chain 3, Iteration: 1 / 2000 [ 0%] (Warmup) Chain 3, Iteration: 200 / 2000 [ 10%] (Warmup) Chain 3, Iteration: 400 / 2000 [ 20%] (Warmup) Chain 3, Iteration: 600 / 2000 [ 30%] (Warmup) Chain 3, Iteration: 800 / 2000 [ 40%] (Warmup) Chain 3, Iteration: 1000 / 2000 [ 50%] (Warmup) Chain 3, Iteration: 1001 / 2000 [ 50%] (Sampling) Chain 3, Iteration: 1200 / 2000 [ 60%] (Sampling) Chain 3, Iteration: 1400 / 2000 [ 70%] (Sampling) Chain 3, Iteration: 1600 / 2000 [ 80%] (Sampling) Chain 3, Iteration: 1800 / 2000 [ 90%] (Sampling) Chain 3, Iteration: 2000 / 2000 [100%] (Sampling)# # Elapsed Time: 0.14014 seconds (Warm-up) # 0.086738 seconds (Sampling) # 0.226878 seconds (Total) #

Warning messages:

1: There were 1 divergent transitions after warmup. Increasing adapt_delta above 0.8 may help.

2: Examine the pairs() plot to diagnose sampling problems

plot_ts(sheet=model$sheet(), fmla=`Drowning Rate (per 100k)` ~ `Year`)

3.7.2 Show Stan code

model$show_model_file()

data {

int<lower=1> T;

int<lower=1> T_new;

vector[T] y;

}

transformed data {

int<lower=T> T_start;

int<lower=T> T_end;

T_start<- T+1;

T_end<- T+T_new;

}

parameters {

real<lower=0> sigma_1;

real<lower=0> sigma_2;

vector[T+T_new] theta_1;

}

model {

for(t in 1:T) {

y[t] ~ normal(theta_1[t], sigma_1);

}

for(t in 2:T_end) {

theta_1[t] ~ normal(theta_1[t-1], sigma_2);

}

}

generated quantities {

vector[T_new] y_tilde;

for (t in T_start:T_end) {

y_tilde[t-T]<- normal_rng(theta_1[t], sigma_1);

}

}

cf. the model itself:

\begin{eqnarray} y_t &\sim& \mathscr{N}(\theta_{1,t}, \, \sigma_1^2) \\[3mm] \theta_{1,t+1} &\sim& \mathscr{N}(\theta_{1,t}, \, \sigma_2^2) \\[3mm] &\Leftrightarrow& \\ y_t &=& \theta_{1,t} + \varepsilon_t, \;\;\;\;\; \varepsilon_t \;\sim\; \mathscr{N}(0, \, \sigma_1^2) \\[3mm] \theta_{1,t+1} &=& \theta_{1,t} + \eta_t, \;\;\;\;\; \eta_t \;\sim\; \mathscr{N}(0, \, \sigma_2^2) \end{eqnarray}

3.7.3 Fitted Stan model

model$fit()

model$plot_y_tilde()

theta_1<- model$extract_par("theta_1", start=1, end=model$T_end())

lines(theta_1_mean ~ Year, data=theta_1, col="darkgreen", type="o")
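The Stan program above forecasts by extending `theta_1` to `T+T_new`, letting the state equation carry the level forward, and then adding observation noise in `generated quantities`. The same logic can be sketched in base R by pushing each posterior draw of the last level and of the two standard deviations through the state and observation equations. The draws below are synthetic stand-ins, loosely matching the printed posterior summaries for `theta_1[35]`, `sigma_1` and `sigma_2` in 3.6.3; with a real fit they would come from `extract()`.

```r
# Simulate h-step-ahead forecasts from a local-level model, one path per draw
forecast_local_level <- function(theta_T, sigma_1, sigma_2, h) {
  n_draws <- length(theta_T)
  y_tilde <- matrix(NA_real_, nrow = n_draws, ncol = h)
  level <- theta_T
  for (k in seq_len(h)) {
    level <- rnorm(n_draws, mean = level, sd = sigma_2)         # state equation
    y_tilde[, k] <- rnorm(n_draws, mean = level, sd = sigma_1)  # observation equation
  }
  y_tilde
}

set.seed(7)
n_draws <- 3000
theta_T_draws <- rnorm(n_draws, mean = 5.65, sd = 1.28)       # synthetic stand-ins
sigma_1_draws <- abs(rnorm(n_draws, mean = 1.64, sd = 0.34))
sigma_2_draws <- abs(rnorm(n_draws, mean = 1.44, sd = 0.44))
y_tilde <- forecast_local_level(theta_T_draws, sigma_1_draws, sigma_2_draws, h = 5)

# Posterior predictive 2.5%, 50% and 97.5% quantiles for each horizon
apply(y_tilde, 2, quantile, probs = c(0.025, 0.5, 0.975))
```

The band widens with the horizon because each step adds another σ₂² of state variance: conditional on the parameters and the last level, the h-step-ahead variance is hσ₂² + σ₁².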

3.8 Mean-varying, linear-trend model, Normal shocks, predict ahead (a.k.a. “local linear-trend model”)

3.8.1 Create model

model<- make_model( sheet="B.19-DROWN", model="mean-varying_linear-trend_normal", T_new=5, iter=5000, force=FALSE )

SAMPLING FOR MODEL 'mean-varying_linear-trend_normal' NOW (CHAIN 1). Chain 1, Iteration: 1 / 5000 [ 0%] (Warmup) Chain 1, Iteration: 500 / 5000 [ 10%] (Warmup) Chain 1, Iteration: 1000 / 5000 [ 20%] (Warmup) Chain 1, Iteration: 1500 / 5000 [ 30%] (Warmup) Chain 1, Iteration: 2000 / 5000 [ 40%] (Warmup) Chain 1, Iteration: 2500 / 5000 [ 50%] (Warmup) Chain 1, Iteration: 2501 / 5000 [ 50%] (Sampling) Chain 1, Iteration: 3000 / 5000 [ 60%] (Sampling) Chain 1, Iteration: 3500 / 5000 [ 70%] (Sampling) Chain 1, Iteration: 4000 / 5000 [ 80%] (Sampling) Chain 1, Iteration: 4500 / 5000 [ 90%] (Sampling) Chain 1, Iteration: 5000 / 5000 [100%] (Sampling)# # Elapsed Time: 1.89458 seconds (Warm-up) # 1.55528 seconds (Sampling) # 3.44986 seconds (Total) # SAMPLING FOR MODEL 'mean-varying_linear-trend_normal' NOW (CHAIN 2). Chain 2, Iteration: 1 / 5000 [ 0%] (Warmup) Chain 2, Iteration: 500 / 5000 [ 10%] (Warmup) Chain 2, Iteration: 1000 / 5000 [ 20%] (Warmup) Chain 2, Iteration: 1500 / 5000 [ 30%] (Warmup) Chain 2, Iteration: 2000 / 5000 [ 40%] (Warmup) Chain 2, Iteration: 2500 / 5000 [ 50%] (Warmup) Chain 2, Iteration: 2501 / 5000 [ 50%] (Sampling) Chain 2, Iteration: 3000 / 5000 [ 60%] (Sampling) Chain 2, Iteration: 3500 / 5000 [ 70%] (Sampling) Chain 2, Iteration: 4000 / 5000 [ 80%] (Sampling) Chain 2, Iteration: 4500 / 5000 [ 90%] (Sampling) Chain 2, Iteration: 5000 / 5000 [100%] (Sampling)# # Elapsed Time: 2.31836 seconds (Warm-up) # 6.79005 seconds (Sampling) # 9.10842 seconds (Total) # SAMPLING FOR MODEL 'mean-varying_linear-trend_normal' NOW (CHAIN 3). 
Chain 3, Iteration: 1 / 5000 [ 0%] (Warmup) Chain 3, Iteration: 500 / 5000 [ 10%] (Warmup) Chain 3, Iteration: 1000 / 5000 [ 20%] (Warmup) Chain 3, Iteration: 1500 / 5000 [ 30%] (Warmup) Chain 3, Iteration: 2000 / 5000 [ 40%] (Warmup) Chain 3, Iteration: 2500 / 5000 [ 50%] (Warmup) Chain 3, Iteration: 2501 / 5000 [ 50%] (Sampling) Chain 3, Iteration: 3000 / 5000 [ 60%] (Sampling) Chain 3, Iteration: 3500 / 5000 [ 70%] (Sampling) Chain 3, Iteration: 4000 / 5000 [ 80%] (Sampling) Chain 3, Iteration: 4500 / 5000 [ 90%] (Sampling) Chain 3, Iteration: 5000 / 5000 [100%] (Sampling)# # Elapsed Time: 1.95461 seconds (Warm-up) # 1.16863 seconds (Sampling) # 3.12324 seconds (Total) #

Warning messages:

1: There were 143 divergent transitions after warmup. Increasing adapt_delta above 0.8 may help.

2: Examine the pairs() plot to diagnose sampling problems

plot_ts(sheet=model$sheet(), fmla=`Drowning Rate (per 100k)` ~ `Year`)

3.8.2 Show Stan code

model$show_model_file()

data {

int<lower=1> T;

int<lower=1> T_new;

vector[T] y;

}

transformed data {

int<lower=T> T_start;

int<lower=T> T_end;

T_start<- T+1;

T_end<- T+T_new;

}

parameters {

real<lower=0> sigma_1;

real<lower=0> sigma_2;

real<lower=0> sigma_3;

vector[T+T_new] theta_1;

vector[T+T_new] theta_2;

}

model {

for(t in 1:T) {

y[t] ~ normal(theta_1[t], sigma_1);

}

for(t in 2:T_end) {

theta_1[t] ~ normal(theta_1[t-1] + theta_2[t], sigma_2);

theta_2[t] ~ normal(theta_2[t-1], sigma_3);

}

}

generated quantities {

vector[T_new] y_tilde;

for (t in T_start:T_end) {

// observation equation y_t = theta_{1,t} + eps_t: the slope theta_2 enters only through the state equation

y_tilde[t-T]<- normal_rng(theta_1[t], sigma_1);

}

}

cf. the model itself:

\begin{eqnarray} y_t &\sim& \mathscr{N}(\theta_{1,t}, \, \sigma_1^2) \\[3mm] \theta_{1,t+1} &\sim& \mathscr{N}(\theta_{1,t} + \theta_{2,t}, \, \sigma_2^2) \\[3mm] \theta_{2,t+1} &\sim& \mathscr{N}(\theta_{2,t}, \, \sigma_3^2) \\[3mm] &\Leftrightarrow& \\ y_t &=& \theta_{1,t} + \varepsilon_t, \;\;\;\;\; \varepsilon_t \;\sim\; \mathscr{N}(0, \, \sigma_1^2) \\[3mm] \theta_{1,t+1} &=& \theta_{1,t} + \theta_{2,t} + \eta_t, \;\;\;\;\; \eta_t \;\sim\; \mathscr{N}(0, \, \sigma_2^2) \\[3mm] \theta_{2,t+1} &=& \theta_{2,t} + \xi_t, \;\;\;\;\; \xi_t \;\sim\; \mathscr{N}(0, \, \sigma_3^2) \end{eqnarray}

3.8.3 Fitted Stan model

model$fit()

Inference for Stan model: mean-varying_linear-trend_normal.

3 chains, each with iter=5000; warmup=2500; thin=1;

post-warmup draws per chain=2500, total post-warmup draws=7500.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

sigma_1 1.75 0.03 0.41 0.59 1.57 1.77 1.97 2.47 211 1.02

sigma_2 0.93 0.05 0.59 0.19 0.53 0.81 1.17 2.58 144 1.05

sigma_3 0.22 0.02 0.17 0.02 0.09 0.17 0.29 0.66 115 1.04

theta_1[1] 19.26 0.03 1.24 16.79 18.45 19.27 20.06 21.76 2073 1.00

theta_1[2] 18.55 0.06 1.17 16.11 17.81 18.62 19.33 20.76 394 1.01

theta_1[3] 18.82 0.03 0.99 16.94 18.18 18.85 19.47 20.71 915 1.00

theta_1[4] 18.80 0.04 1.02 16.84 18.13 18.78 19.46 20.83 674 1.00

theta_1[5] 18.57 0.06 1.12 16.61 17.82 18.46 19.19 21.20 335 1.01

theta_1[6] 17.20 0.05 1.03 14.99 16.57 17.29 17.90 19.07 403 1.02

theta_1[7] 16.78 0.04 0.96 14.86 16.15 16.84 17.44 18.55 595 1.01

theta_1[8] 16.71 0.02 0.89 14.94 16.13 16.70 17.29 18.44 2283 1.00

theta_1[9] 16.74 0.04 0.96 14.88 16.10 16.71 17.36 18.60 734 1.00

theta_1[10] 16.09 0.03 0.93 14.24 15.46 16.09 16.71 17.92 1261 1.00

theta_1[11] 15.84 0.03 0.95 14.01 15.21 15.83 16.44 17.80 1196 1.00

theta_1[12] 15.86 0.09 1.33 13.75 14.94 15.67 16.57 19.24 237 1.02

theta_1[13] 14.40 0.02 0.90 12.67 13.82 14.38 14.98 16.22 2199 1.00

theta_1[14] 13.30 0.02 0.89 11.53 12.73 13.30 13.88 15.02 2476 1.00

theta_1[15] 12.17 0.04 0.99 10.12 11.55 12.22 12.83 14.00 496 1.01

theta_1[16] 11.68 0.02 0.90 9.86 11.13 11.68 12.26 13.44 1408 1.00

theta_1[17] 11.27 0.04 0.96 9.36 10.65 11.26 11.88 13.21 715 1.00

theta_1[18] 10.13 0.05 1.00 8.12 9.48 10.17 10.82 12.03 448 1.01

theta_1[19] 9.73 0.03 0.91 7.93 9.14 9.74 10.36 11.56 945 1.00

theta_1[20] 9.59 0.05 1.02 7.66 8.90 9.56 10.21 11.83 483 1.01

theta_1[21] 8.38 0.07 1.11 5.89 7.72 8.46 9.15 10.39 294 1.02

theta_1[22] 8.34 0.03 0.92 6.52 7.73 8.38 8.97 10.11 1070 1.00

theta_1[23] 8.06 0.04 0.94 6.20 7.40 8.08 8.69 9.86 660 1.01

theta_1[24] 8.16 0.02 0.86 6.42 7.61 8.17 8.73 9.85 1878 1.00

theta_1[25] 8.32 0.02 0.86 6.63 7.76 8.31 8.87 10.04 2712 1.00

theta_1[26] 8.66 0.03 0.95 6.86 8.03 8.61 9.29 10.64 744 1.01

theta_1[27] 8.51 0.02 0.91 6.71 7.93 8.49 9.11 10.35 1586 1.00

theta_1[28] 8.47 0.03 0.94 6.64 7.85 8.44 9.08 10.40 1156 1.00

theta_1[29] 8.31 0.04 0.99 6.44 7.62 8.27 8.94 10.32 673 1.01

theta_1[30] 7.52 0.04 0.94 5.70 6.89 7.51 8.17 9.36 695 1.00

theta_1[31] 7.32 0.02 0.90 5.58 6.72 7.30 7.91 9.10 1724 1.00

theta_1[32] 7.17 0.03 0.94 5.37 6.54 7.16 7.79 9.03 847 1.01

theta_1[33] 6.41 0.02 0.93 4.59 5.79 6.41 7.03 8.26 2012 1.00

theta_1[34] 5.92 0.03 1.02 3.94 5.26 5.89 6.56 7.98 1614 1.00

theta_1[35] 5.47 0.04 1.23 3.05 4.66 5.47 6.23 7.87 1076 1.00

theta_1[36] 5.09 0.06 1.91 1.16 3.96 5.11 6.25 8.87 1206 1.00

theta_1[37] 4.71 0.08 2.53 -0.42 3.20 4.77 6.23 9.80 1030 1.00

theta_1[38] 4.31 0.10 3.18 -2.11 2.43 4.42 6.12 10.75 958 1.00

theta_1[39] 3.92 0.12 3.86 -4.04 1.70 4.04 6.09 11.85 962 1.00

theta_1[40] 3.52 0.15 4.53 -5.81 0.94 3.62 6.04 12.62 971 1.00

theta_2[1] -0.38 0.02 0.61 -1.61 -0.67 -0.39 -0.09 0.88 1200 1.00

theta_2[2] -0.38 0.02 0.55 -1.52 -0.64 -0.39 -0.10 0.76 961 1.00

theta_2[3] -0.35 0.02 0.49 -1.33 -0.60 -0.37 -0.11 0.65 835 1.00

theta_2[4] -0.37 0.02 0.45 -1.28 -0.61 -0.38 -0.13 0.54 832 1.00

theta_2[5] -0.41 0.01 0.42 -1.32 -0.62 -0.40 -0.18 0.40 1222 1.00

theta_2[6] -0.46 0.01 0.42 -1.39 -0.64 -0.44 -0.23 0.27 1191 1.00

theta_2[7] -0.44 0.01 0.39 -1.30 -0.62 -0.43 -0.23 0.29 1320 1.00

theta_2[8] -0.42 0.01 0.38 -1.25 -0.61 -0.42 -0.22 0.33 1413 1.00

theta_2[9] -0.41 0.01 0.38 -1.18 -0.60 -0.42 -0.22 0.36 1534 1.00

theta_2[10] -0.44 0.01 0.37 -1.20 -0.62 -0.44 -0.25 0.29 1561 1.00

theta_2[11] -0.47 0.01 0.37 -1.22 -0.65 -0.47 -0.29 0.28 1486 1.00

theta_2[12] -0.52 0.01 0.36 -1.27 -0.69 -0.51 -0.33 0.21 1541 1.00

theta_2[13] -0.60 0.02 0.39 -1.48 -0.79 -0.57 -0.39 0.09 632 1.01

theta_2[14] -0.62 0.02 0.40 -1.53 -0.82 -0.58 -0.40 0.08 503 1.01

theta_2[15] -0.62 0.02 0.40 -1.49 -0.81 -0.58 -0.39 0.08 522 1.01

theta_2[16] -0.58 0.01 0.38 -1.40 -0.77 -0.55 -0.37 0.14 804 1.01

theta_2[17] -0.55 0.01 0.37 -1.36 -0.73 -0.52 -0.34 0.15 830 1.00

theta_2[18] -0.53 0.01 0.37 -1.35 -0.72 -0.51 -0.33 0.15 937 1.00

theta_2[19] -0.49 0.01 0.37 -1.26 -0.67 -0.47 -0.29 0.22 1514 1.00

theta_2[20] -0.45 0.01 0.36 -1.20 -0.63 -0.44 -0.26 0.26 1607 1.00

theta_2[21] -0.42 0.01 0.36 -1.21 -0.60 -0.41 -0.23 0.28 1672 1.00

theta_2[22] -0.35 0.01 0.36 -1.10 -0.54 -0.36 -0.16 0.38 1497 1.00

theta_2[23] -0.29 0.01 0.37 -0.99 -0.50 -0.32 -0.11 0.52 1188 1.00

theta_2[24] -0.24 0.01 0.39 -0.98 -0.45 -0.28 -0.05 0.62 753 1.00

theta_2[25] -0.21 0.02 0.40 -0.93 -0.44 -0.25 0.00 0.69 555 1.01

theta_2[26] -0.20 0.02 0.40 -0.93 -0.43 -0.24 0.01 0.70 532 1.01

theta_2[27] -0.23 0.01 0.39 -0.96 -0.45 -0.26 -0.03 0.66 706 1.00

theta_2[28] -0.25 0.01 0.38 -0.99 -0.46 -0.27 -0.05 0.56 1033 1.00

theta_2[29] -0.29 0.01 0.38 -1.04 -0.50 -0.31 -0.09 0.49 1572 1.00

theta_2[30] -0.34 0.01 0.38 -1.11 -0.54 -0.34 -0.12 0.43 1658 1.00

theta_2[31] -0.34 0.01 0.38 -1.12 -0.55 -0.35 -0.14 0.45 1712 1.00

theta_2[32] -0.36 0.01 0.41 -1.19 -0.58 -0.36 -0.14 0.45 1563 1.00

theta_2[33] -0.39 0.01 0.43 -1.31 -0.62 -0.39 -0.16 0.48 1086 1.00

theta_2[34] -0.40 0.01 0.47 -1.38 -0.65 -0.38 -0.15 0.54 1012 1.00

theta_2[35] -0.40 0.02 0.53 -1.53 -0.67 -0.39 -0.12 0.63 894 1.00

theta_2[36] -0.40 0.02 0.59 -1.70 -0.67 -0.38 -0.10 0.82 884 1.00

theta_2[37] -0.40 0.02 0.65 -1.82 -0.70 -0.38 -0.07 0.94 841 1.00

theta_2[38] -0.40 0.02 0.70 -1.93 -0.71 -0.38 -0.06 1.02 902 1.00

theta_2[39] -0.40 0.02 0.76 -2.04 -0.73 -0.38 -0.03 1.16 990 1.00

theta_2[40] -0.40 0.02 0.80 -2.16 -0.74 -0.38 -0.04 1.22 1216 1.00

y_tilde[1] 4.69 0.07 2.89 -1.03 2.80 4.71 6.58 10.43 1677 1.00

y_tilde[2] 4.31 0.10 3.47 -2.46 2.12 4.30 6.49 11.12 1186 1.00

y_tilde[3] 3.90 0.12 4.07 -4.23 1.39 3.99 6.42 12.09 1124 1.00

y_tilde[4] 3.52 0.15 4.79 -6.31 0.69 3.62 6.33 13.11 1035 1.00

y_tilde[5] 3.10 0.17 5.46 -8.12 -0.03 3.22 6.24 14.11 1023 1.00

lp__ 8.09 8.11 36.07 -57.31 -18.12 7.56 31.88 81.88 20 1.09

Samples were drawn using NUTS(diag_e) at Tue May 31 00:00:44 2016.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).

model$plot_y_tilde()

model$plot_y_tilde()

## TODO: put this into the model:

theta_1<- model$extract_par("theta_1", start=1, end=model$T_end())

lines(theta_1_mean ~ Year, data=theta_1, col="darkgreen", type="o")

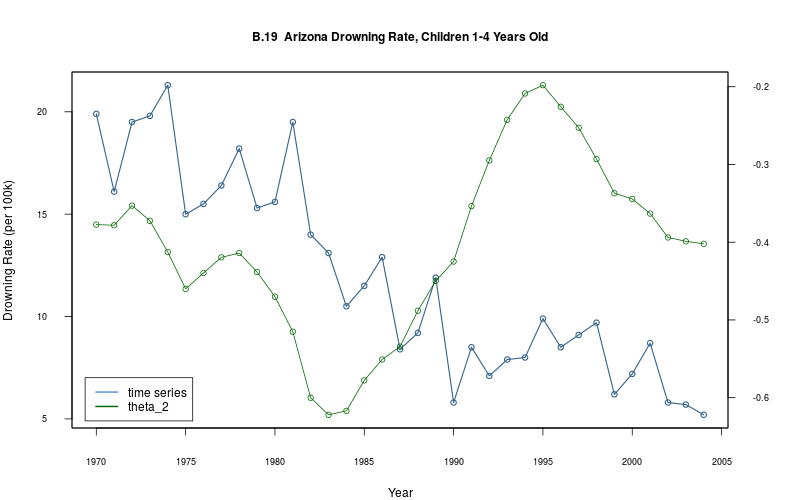

theta_2<- model$extract_par("theta_2", start=1, end=model$T_end())

par(mar=c(5,4,4,5)+.1)

plot_ts(model$sheet(), fmla=`Drowning Rate (per 100k)` ~ `Year`)

par(new=TRUE)

plot( theta_2_mean ~ Year, data=theta_2 %>% filter(Year <= (get_t(model$sheet()) %>% max)), col="darkgreen", type="o", xaxt="n", yaxt="n", xlab="", ylab="" )

axis(4)

# mtext("theta_2", side=4, line=3)

legend("bottomleft", col=c("steelblue3","darkgreen"), lwd=2, legend=c("time series","theta_2"), inset=0.02)
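To build intuition for what the three standard deviations control, the sketch below simulates the local linear-trend model in base R. It follows the timing of the equations under 3.8.2 (the slope at time t moves the level from t to t+1), whereas the Stan code uses `theta_2[t]` in the level update; the two are just different timing conventions. Setting σ₃ = 0 freezes the slope, and setting all three to 0 reduces the model to a deterministic straight line. The starting level, slope and standard deviations are hypothetical, chosen only to be on the scale of the fit above.

```r
# Simulate the local linear-trend model:
#   y_t           = theta_{1,t} + eps_t,                 eps_t ~ N(0, sigma_1^2)
#   theta_{1,t+1} = theta_{1,t} + theta_{2,t} + eta_t,   eta_t ~ N(0, sigma_2^2)
#   theta_{2,t+1} = theta_{2,t} + xi_t,                  xi_t  ~ N(0, sigma_3^2)
simulate_local_linear_trend <- function(n, level_1, slope_1,
                                        sigma_1, sigma_2, sigma_3) {
  level <- slope <- numeric(n)
  level[1] <- level_1
  slope[1] <- slope_1
  for (t in seq_len(n - 1)) {
    level[t + 1] <- level[t] + slope[t] + rnorm(1, sd = sigma_2)
    slope[t + 1] <- slope[t] + rnorm(1, sd = sigma_3)
  }
  data.frame(t = seq_len(n),
             y = level + rnorm(n, sd = sigma_1),
             level = level,
             slope = slope)
}

# Hypothetical settings, roughly the scale of the drowning-rate fit
set.seed(11)
sim <- simulate_local_linear_trend(n = 40, level_1 = 19, slope_1 = -0.4,
                                   sigma_1 = 1.75, sigma_2 = 0.93, sigma_3 = 0.22)
head(round(sim, 2))
```

The same recursion explains the fan shape of the forecasts in 3.8.3: given the current level and slope, the expected level h steps ahead is θ₁,T + hθ₂,T, so uncertainty about the current slope is multiplied by h and the forecast spread grows roughly linearly with the horizon.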

3.9 Mean-varying with seasonal, Normal shocks (a.k.a. “local model with stochastic seasonal”)

3.9.1 Create model

model<- make_model( sheet="B.5-BEV", model="mean-varying_seasonal_normal", T_new=NULL, seasonal=12, iter=5000, force=FALSE )

SAMPLING FOR MODEL 'mean-varying_seasonal_normal' NOW (CHAIN 1).

Chain 1, Iteration: 1 / 5000 [ 0%] (Warmup)

Chain 1, Iteration: 500 / 5000 [ 10%] (Warmup)

Chain 1, Iteration: 1000 / 5000 [ 20%] (Warmup)

Chain 1, Iteration: 1500 / 5000 [ 30%] (Warmup)

Chain 1, Iteration: 2000 / 5000 [ 40%] (Warmup)

Chain 1, Iteration: 2500 / 5000 [ 50%] (Warmup)

Chain 1, Iteration: 2501 / 5000 [ 50%] (Sampling)

Chain 1, Iteration: 3000 / 5000 [ 60%] (Sampling)

Chain 1, Iteration: 3500 / 5000 [ 70%] (Sampling)

Chain 1, Iteration: 4000 / 5000 [ 80%] (Sampling)

Chain 1, Iteration: 4500 / 5000 [ 90%] (Sampling)

Chain 1, Iteration: 5000 / 5000 [100%] (Sampling)#

# Elapsed Time: 50.1348 seconds (Warm-up)

# 228.302 seconds (Sampling)

# 278.437 seconds (Total)

#

SAMPLING FOR MODEL 'mean-varying_seasonal_normal' NOW (CHAIN 2).

Chain 2, Iteration: 1 / 5000 [ 0%] (Warmup)

Chain 2, Iteration: 500 / 5000 [ 10%] (Warmup)

Chain 2, Iteration: 1000 / 5000 [ 20%] (Warmup)

Chain 2, Iteration: 1500 / 5000 [ 30%] (Warmup)

Chain 2, Iteration: 2000 / 5000 [ 40%] (Warmup)

Chain 2, Iteration: 2500 / 5000 [ 50%] (Warmup)

Chain 2, Iteration: 2501 / 5000 [ 50%] (Sampling)

Chain 2, Iteration: 3000 / 5000 [ 60%] (Sampling)

Chain 2, Iteration: 3500 / 5000 [ 70%] (Sampling)

Chain 2, Iteration: 4000 / 5000 [ 80%] (Sampling)

Chain 2, Iteration: 4500 / 5000 [ 90%] (Sampling)

Chain 2, Iteration: 5000 / 5000 [100%] (Sampling)#

# Elapsed Time: 68.0167 seconds (Warm-up)

# 17.2331 seconds (Sampling)

# 85.2498 seconds (Total)

#

SAMPLING FOR MODEL 'mean-varying_seasonal_normal' NOW (CHAIN 3).

Chain 3, Iteration: 1 / 5000 [ 0%] (Warmup)

Chain 3, Iteration: 500 / 5000 [ 10%] (Warmup)

Chain 3, Iteration: 1000 / 5000 [ 20%] (Warmup)

Chain 3, Iteration: 1500 / 5000 [ 30%] (Warmup)

Chain 3, Iteration: 2000 / 5000 [ 40%] (Warmup)

Chain 3, Iteration: 2500 / 5000 [ 50%] (Warmup)

Chain 3, Iteration: 2501 / 5000 [ 50%] (Sampling)

Chain 3, Iteration: 3000 / 5000 [ 60%] (Sampling)

Chain 3, Iteration: 3500 / 5000 [ 70%] (Sampling)

Chain 3, Iteration: 4000 / 5000 [ 80%] (Sampling)

Chain 3, Iteration: 4500 / 5000 [ 90%] (Sampling)

Chain 3, Iteration: 5000 / 5000 [100%] (Sampling)#

# Elapsed Time: 172.868 seconds (Warm-up)

# 31.8761 seconds (Sampling)

# 204.744 seconds (Total)

#

The following numerical problems occured the indicated number of times after warmup on chain 3

count

Exception thrown at line 51: normal_log: Scale parameter is 0, but must be > 0! 1

When a numerical problem occurs, the Metropolis proposal gets rejected.

However, by design Metropolis proposals sometimes get rejected even when there are no numerical problems.

Thus, if the number in the 'count' column is small, do not ask about this message on stan-users.

Warning messages:

1: There were 2 divergent transitions after warmup. Increasing adapt_delta above 0.8 may help.

2: There were 694 transitions after warmup that exceeded the maximum treedepth. Increase max_treedepth above 10.

3: Examine the pairs() plot to diagnose sampling problems

plot_ts(sheet=model$sheet(), fmla=`USD (millions)` ~ `Month`)

3.9.2 Show Stan code

model$show_model_file()

functions {

real sum_constraint(vector x, int t, int Lambda) {

int t_start;

int t_end;

real s;

s<- 0;

// sum x[t-(Lambda-1)], ..., x[t-1]; a Stan for-loop skips its body when start > end, so iterate in increasing order

t_start<- t-1;

t_end<- t-(Lambda-1);

for (i in t_end:t_start) {

s<- s - x[i];

}

return s;

}

}

data {

int<lower=12> T;

vector[T] y;

int<lower=12,upper=12> Lambda;

}

parameters {

vector[T] theta_1;

vector[T] s;

real<lower=0> sigma_1;

real<lower=0> sigma_2;

real<lower=0> sigma_3;

}

transformed parameters {

vector[T] ss;

for (t in 1:(Lambda-1)) {

ss[t]<- 0;

}

for (t in Lambda:T) {

ss[t]<- 0;

for (lambda in 1:(Lambda-1)) {

ss[t]<- ss[t] - s[t-lambda];

}

}

}

model {

for (t in 1:T) {

y[t] ~ normal(theta_1[t] + s[t], sigma_1);

}

for (t in 2:T) {

theta_1[t] ~ normal(theta_1[t-1], sigma_2);

}

for (t in Lambda:T) {

// s[t] ~ normal(-s[t-1]-s[t-2]-s[t-3]-s[t-4]-s[t-5]-s[t-6]-s[t-7]-s[t-8]-s[t-9]-s[t-10]-s[t-11], sigma_3);

s[t] ~ normal(ss[t], sigma_3);

// s[t] ~ normal(sum_constraint(s, t, Lambda), sigma_3);

}

// priors on initial values

theta_1[1] ~ normal(y[1], sigma_2);

// hyperparameters

sigma_1 ~ inv_gamma(0.001, 0.001);

sigma_2 ~ inv_gamma(0.001, 0.001);

sigma_3 ~ inv_gamma(0.001, 0.001);

}
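The `transformed parameters` block builds `ss[t]` as minus the sum of the previous `Lambda - 1` seasonal effects, so `s[t] ~ normal(ss[t], sigma_3)` keeps any `Lambda` consecutive effects summing to roughly zero. A base-R sketch of the same recursion follows; the eleven starting effects are hypothetical. With `sigma_3 = 0` the constraint holds exactly and the pattern repeats with period `Lambda`, which gives a quick check of the recursion.

```r
# Simulate the dummy-variable seasonal component used in the Stan model:
#   s_t = -(s_{t-1} + ... + s_{t-(Lambda-1)}) + xi_t,  xi_t ~ N(0, sigma_3^2)
simulate_dummy_seasonal <- function(n, Lambda, s_init, sigma_3) {
  stopifnot(Lambda >= 2, length(s_init) == Lambda - 1, n >= Lambda)
  s <- numeric(n)
  s[1:(Lambda - 1)] <- s_init               # free initial effects
  for (t in Lambda:n) {
    s[t] <- -sum(s[(t - Lambda + 1):(t - 1)]) + rnorm(1, sd = sigma_3)
  }
  s
}

# Hypothetical monthly effects for the first 11 months
s_init <- c(-300, -150, 100, 250, 300, 200, 50, -50, -100, -200, 150)
s <- simulate_dummy_seasonal(n = 48, Lambda = 12, s_init = s_init, sigma_3 = 0)

# Sum over every window of 12 consecutive months (all zero when sigma_3 = 0)
window_sums <- sapply(12:48, function(t) sum(s[(t - 11):t]))
```

With `sigma_3 > 0` the window sums wander around zero instead, which is what lets the seasonal pattern evolve slowly over the years.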

cf. the model itself:

\begin{eqnarray} y_t &\sim& \mathscr{N}(\theta_{1,t} + s_t, \, \sigma_1^2) \\[3mm] \theta_{1,t+1} &\sim& \mathscr{N}(\theta_{1,t}, \, \sigma_2^2) \\[3mm] s_{t+1} &\sim& \mathscr{N}\Big(-\sum_{\lambda = 1}^{\Lambda - 1} s_{t+1-\lambda}, \, \sigma_3^2\Big), \;\;\;\;\;\mbox{one effect per season, \(\Lambda\) seasons per cycle, e.g. \(\Lambda=12\) for monthly data} \\[3mm] &\Leftrightarrow& \\[3mm] y_t &=& \theta_{1,t} + s_t + \varepsilon_t, \;\;\;\;\; \varepsilon_t \;\sim\; \mathscr{N}(0, \, \sigma_1^2) \\[3mm] \theta_{1,t+1} &=& \theta_{1,t} + \eta_t, \;\;\;\;\; \eta_t \;\sim\; \mathscr{N}(0, \, \sigma_2^2) \\[3mm] s_{t+1} &=& -\sum_{\lambda = 1}^{\Lambda - 1} s_{t+1-\lambda} + \xi_t, \;\;\;\;\; \xi_t \;\sim\; \mathscr{N}(0, \, \sigma_3^2) \end{eqnarray}

3.9.3 Fitted Stan model

model$fit()

Inference for Stan model: mean-varying_seasonal_normal.

3 chains, each with iter=5000; warmup=2500; thin=1;

post-warmup draws per chain=2500, total post-warmup draws=7500.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

theta_1[1] 4008.90 3.33 68.46 3879.00 3963.33 4007.37 4053.16 4148.38 422 1.01

theta_1[2] 4241.07 4.92 65.13 4110.23 4198.42 4242.46 4284.83 4365.13 175 1.02

theta_1[3] 4346.13 7.33 62.15 4221.40 4304.67 4347.42 4387.92 4465.07 72 1.03

theta_1[4] 4319.13 5.91 59.69 4202.06 4279.84 4319.24 4358.53 4436.34 102 1.03

theta_1[5] 4261.42 1.55 57.64 4146.11 4223.29 4261.57 4299.97 4375.59 1378 1.01

theta_1[6] 4202.15 1.22 57.95 4086.95 4164.09 4201.91 4240.45 4313.40 2267 1.00

theta_1[7] 4186.82 1.47 57.69 4076.61 4147.72 4186.62 4225.48 4302.43 1536 1.00

theta_1[8] 4107.27 1.66 59.11 3989.43 4067.48 4106.70 4147.05 4224.39 1265 1.01

theta_1[9] 4167.03 1.21 57.89 4052.76 4127.59 4168.17 4205.54 4280.77 2298 1.00

theta_1[10] 4218.49 2.14 59.55 4100.47 4178.61 4219.02 4258.94 4335.92 775 1.01

theta_1[11] 4218.10 1.23 58.10 4102.94 4178.02 4218.73 4257.09 4330.89 2230 1.00

theta_1[12] 4236.93 1.18 58.28 4119.59 4198.62 4236.60 4275.43 4351.36 2460 1.00

theta_1[13] 4233.54 1.40 55.37 4127.70 4196.71 4232.35 4268.99 4347.13 1572 1.00

theta_1[14] 4200.80 1.92 57.47 4091.62 4161.92 4199.94 4238.47 4316.55 896 1.01

theta_1[15] 4137.17 2.12 55.42 4030.43 4099.77 4137.09 4173.09 4249.04 681 1.01

theta_1[16] 4168.29 1.21 53.36 4062.89 4133.77 4168.25 4203.54 4271.57 1951 1.00

theta_1[17] 4230.18 1.46 53.99 4125.68 4193.86 4230.22 4265.83 4335.93 1368 1.01

theta_1[18] 4256.77 1.23 53.52 4151.02 4221.07 4257.90 4292.61 4358.40 1892 1.00

theta_1[19] 4272.75 1.26 53.10 4169.17 4236.56 4272.96 4308.60 4377.15 1774 1.00

theta_1[20] 4307.60 1.37 52.93 4203.71 4271.99 4308.05 4343.19 4409.09 1487 1.01

theta_1[21] 4289.40 1.31 54.39 4181.36 4253.20 4290.22 4325.73 4395.32 1716 1.00

theta_1[22] 4201.35 1.86 58.01 4087.12 4162.26 4201.33 4240.74 4313.91 970 1.00

theta_1[23] 4284.57 1.21 53.08 4182.34 4248.68 4283.48 4320.12 4389.61 1936 1.00

theta_1[24] 4318.72 1.13 52.24 4218.80 4283.16 4318.13 4353.65 4422.17 2134 1.00

theta_1[25] 4303.10 2.24 52.74 4203.40 4267.59 4302.23 4337.20 4410.57 556 1.01

theta_1[26] 4398.17 1.70 54.21 4295.20 4360.90 4397.32 4433.29 4507.60 1016 1.00

theta_1[27] 4439.01 1.44 53.08 4334.42 4404.00 4438.15 4474.91 4542.69 1350 1.00

theta_1[28] 4422.95 1.15 51.83 4321.27 4387.79 4423.29 4458.40 4523.37 2046 1.00

theta_1[29] 4449.86 1.15 53.26 4345.69 4414.16 4450.64 4485.43 4551.73 2151 1.00

theta_1[30] 4512.33 1.66 52.98 4404.13 4476.41 4512.84 4548.99 4612.67 1021 1.01
theta_1[31] 4468.80 1.20 52.63 4363.61 4433.30 4469.71 4504.68 4569.59 1926 1.00
theta_1[32] 4444.95 2.74 53.63 4339.66 4408.71 4444.39 4480.16 4552.79 382 1.01
theta_1[33] 4431.14 1.63 53.07 4327.43 4394.89 4431.05 4467.45 4535.73 1058 1.01
theta_1[34] 4504.69 1.46 53.35 4397.58 4468.41 4505.63 4541.07 4607.67 1339 1.00
theta_1[35] 4547.98 1.36 52.95 4443.03 4512.83 4547.36 4582.60 4650.73 1513 1.00
theta_1[36] 4577.16 1.18 52.46 4474.84 4542.10 4576.27 4612.70 4679.29 1989 1.00
theta_1[37] 4525.41 1.76 52.57 4424.84 4490.70 4525.15 4559.15 4628.67 892 1.01
theta_1[38] 4540.57 1.61 52.83 4440.02 4504.01 4540.01 4575.44 4647.15 1072 1.00
theta_1[39] 4588.23 1.39 51.15 4487.09 4554.27 4588.13 4622.83 4689.01 1360 1.00
theta_1[40] 4585.21 1.04 50.85 4486.79 4550.46 4584.68 4619.72 4682.78 2403 1.00
theta_1[41] 4610.07 1.11 52.69 4509.05 4574.89 4609.00 4645.30 4715.14 2269 1.00
theta_1[42] 4665.27 1.10 51.38 4564.88 4630.37 4665.01 4700.11 4765.97 2164 1.00
theta_1[43] 4695.15 1.41 53.30 4591.38 4658.97 4696.36 4730.63 4800.40 1436 1.00
theta_1[44] 4867.66 3.20 57.73 4753.35 4829.46 4867.92 4906.14 4976.93 325 1.01
theta_1[45] 4805.04 1.80 53.66 4696.91 4769.89 4805.94 4841.61 4908.80 893 1.01
theta_1[46] 4768.78 1.22 51.18 4668.06 4734.46 4769.15 4803.26 4868.74 1760 1.00
theta_1[47] 4692.93 5.65 54.63 4590.93 4655.92 4691.18 4727.91 4803.29 94 1.03
theta_1[48] 4753.98 1.53 51.94 4651.95 4720.20 4753.04 4788.37 4858.22 1149 1.01
theta_1[49] 4845.77 1.87 53.04 4738.33 4811.95 4846.52 4881.30 4947.50 808 1.01
theta_1[50] 4813.01 1.36 52.81 4708.74 4777.92 4813.35 4847.38 4916.38 1507 1.00
theta_1[51] 4845.52 1.80 51.65 4747.08 4810.30 4844.43 4879.95 4949.95 824 1.01
theta_1[52] 4959.61 1.48 51.18 4858.49 4925.23 4959.20 4994.07 5061.76 1188 1.01
theta_1[53] 4992.80 1.49 52.83 4884.97 4958.34 4993.60 5028.18 5095.31 1256 1.01
theta_1[54] 5002.10 1.35 51.70 4899.06 4967.74 5002.10 5036.41 5102.83 1461 1.00
theta_1[55] 5066.15 1.67 52.97 4962.04 5030.02 5066.62 5101.89 5167.78 1005 1.01
theta_1[56] 4995.71 1.24 51.42 4897.03 4961.85 4994.90 5029.82 5097.74 1711 1.01
theta_1[57] 4988.76 1.34 51.32 4887.65 4953.67 4989.43 5022.94 5089.49 1464 1.00
theta_1[58] 4985.39 1.69 52.66 4883.16 4949.53 4985.65 5020.30 5087.72 975 1.00
theta_1[59] 5115.83 6.06 56.81 4999.64 5080.15 5116.50 5154.58 5223.75 88 1.03
theta_1[60] 5011.92 1.18 52.00 4910.00 4976.80 5012.13 5046.83 5113.29 1926 1.00
theta_1[61] 4892.95 6.52 54.59 4788.30 4855.82 4891.90 4928.96 5003.16 70 1.03
theta_1[62] 4933.63 1.58 50.92 4834.67 4899.13 4933.44 4967.22 5034.88 1045 1.00
theta_1[63] 5005.29 1.44 52.05 4901.21 4970.39 5005.50 5040.79 5107.81 1311 1.01
theta_1[64] 4997.17 1.12 50.83 4899.08 4962.56 4997.44 5030.28 5097.46 2066 1.00
theta_1[65] 5013.59 1.21 50.82 4914.42 4980.53 5013.90 5046.86 5113.82 1775 1.01
theta_1[66] 4992.03 1.34 52.31 4891.75 4956.28 4990.61 5027.30 5095.34 1522 1.00
theta_1[67] 5093.97 1.30 52.78 4992.44 5058.48 5093.65 5129.29 5200.75 1640 1.00
theta_1[68] 5114.16 1.09 50.11 5017.30 5081.60 5113.41 5146.78 5212.02 2098 1.00
theta_1[69] 5171.27 1.20 51.24 5070.44 5137.40 5171.83 5204.55 5274.13 1812 1.00
theta_1[70] 5264.91 1.83 53.89 5158.47 5228.95 5265.49 5300.91 5371.21 868 1.01
theta_1[71] 5223.99 1.27 51.68 5123.25 5189.22 5224.32 5258.95 5325.32 1643 1.00
theta_1[72] 5220.37 1.19 51.31 5117.30 5186.74 5220.73 5254.39 5320.65 1853 1.00
theta_1[73] 5239.72 1.76 51.07 5138.86 5206.25 5239.94 5273.95 5340.48 843 1.00
theta_1[74] 5220.09 1.36 50.86 5118.64 5187.42 5220.47 5253.92 5319.80 1398 1.00
theta_1[75] 5155.43 1.62 51.97 5054.58 5120.65 5155.38 5189.88 5259.63 1033 1.01
theta_1[76] 5186.90 1.40 50.83 5091.21 5152.45 5185.33 5220.59 5290.04 1314 1.00
theta_1[77] 5206.92 1.51 53.04 5100.13 5173.13 5207.76 5241.74 5312.39 1236 1.00
theta_1[78] 5281.74 1.54 51.90 5179.31 5246.93 5281.96 5316.28 5382.58 1131 1.01
theta_1[79] 5256.57 1.14 50.72 5153.88 5223.49 5256.82 5291.52 5356.78 1967 1.00
theta_1[80] 5241.61 1.23 50.61 5138.15 5207.83 5242.26 5275.56 5339.76 1686 1.00
theta_1[81] 5265.08 1.35 53.23 5159.07 5228.82 5266.08 5301.55 5366.23 1565 1.01
theta_1[82] 5178.00 1.87 52.91 5076.38 5143.26 5176.89 5212.15 5285.48 797 1.01
theta_1[83] 5053.38 5.17 54.96 4946.89 5016.81 5052.66 5089.94 5163.24 113 1.02
theta_1[84] 5101.88 1.26 50.59 5002.16 5067.69 5102.51 5136.98 5197.30 1618 1.00
theta_1[85] 5113.09 1.16 51.55 5012.69 5077.99 5113.19 5147.13 5215.27 1976 1.00
theta_1[86] 5131.33 1.42 51.47 5028.28 5097.38 5131.36 5166.27 5230.48 1319 1.00
theta_1[87] 5134.90 1.32 50.95 5036.15 5100.28 5134.02 5170.21 5235.04 1481 1.00
theta_1[88] 5149.68 1.43 52.41 5046.18 5116.17 5148.81 5184.72 5251.39 1349 1.00
theta_1[89] 5081.35 1.82 54.61 4969.43 5045.05 5081.44 5117.50 5186.30 897 1.00
theta_1[90] 5156.80 1.38 51.80 5058.02 5121.42 5155.95 5191.48 5259.56 1415 1.00
theta_1[91] 5140.22 1.17 50.76 5042.27 5106.41 5140.58 5174.64 5239.43 1896 1.00
theta_1[92] 5103.65 1.27 51.50 5002.66 5068.89 5103.13 5138.55 5206.34 1642 1.00
theta_1[93] 5137.62 2.28 52.09 5034.02 5102.68 5137.38 5171.94 5241.49 520 1.01
theta_1[94] 5183.43 1.36 51.93 5083.48 5148.42 5183.05 5218.27 5287.26 1453 1.00
theta_1[95] 5168.56 1.22 50.52 5068.89 5134.58 5169.02 5203.41 5264.58 1705 1.00
theta_1[96] 5142.02 1.63 54.96 5031.46 5106.08 5143.44 5179.29 5249.14 1139 1.00
theta_1[97] 5234.10 1.39 51.94 5132.10 5199.25 5234.15 5268.71 5335.08 1401 1.00
theta_1[98] 5220.93 1.45 51.39 5122.07 5186.33 5220.53 5254.19 5324.22 1261 1.00
theta_1[99] 5281.24 1.71 51.43 5178.93 5246.68 5281.81 5315.64 5380.12 905 1.01
theta_1[100] 5293.70 1.42 51.41 5190.16 5259.04 5294.16 5327.42 5395.58 1306 1.01
theta_1[101] 5244.37 1.17 51.91 5139.57 5210.64 5244.66 5279.08 5346.42 1964 1.00
theta_1[102] 5278.95 1.47 52.49 5175.97 5244.56 5278.73 5314.56 5380.98 1281 1.01
theta_1[103] 5174.92 2.57 53.65 5072.52 5138.35 5174.14 5211.05 5279.11 437 1.01
theta_1[104] 5219.61 2.14 51.68 5121.86 5184.49 5218.31 5252.67 5325.55 583 1.02
theta_1[105] 5357.08 2.14 54.16 5249.28 5321.26 5357.65 5393.92 5461.21 643 1.01
theta_1[106] 5324.66 1.20 51.63 5224.84 5289.45 5324.72 5358.71 5428.41 1864 1.00
theta_1[107] 5296.16 1.23 52.14 5196.07 5262.32 5295.20 5330.81 5400.69 1807 1.00
theta_1[108] 5230.78 1.92 56.21 5118.94 5193.61 5231.71 5268.49 5340.79 855 1.01
theta_1[109] 5323.17 1.44 52.77 5221.36 5287.48 5323.12 5357.59 5427.92 1334 1.00
theta_1[110] 5432.33 2.49 54.08 5323.63 5396.98 5432.32 5468.33 5539.27 470 1.02
theta_1[111] 5387.19 1.37 51.82 5286.68 5352.47 5387.70 5421.85 5488.92 1434 1.00
theta_1[112] 5331.84 1.88 53.13 5229.95 5296.34 5330.70 5366.20 5439.64 795 1.01
theta_1[113] 5425.03 1.52 53.31 5319.55 5390.23 5424.38 5460.65 5531.47 1233 1.00
theta_1[114] 5349.78 1.49 53.57 5245.67 5313.64 5350.34 5386.06 5454.57 1295 1.00
theta_1[115] 5466.24 1.25 51.61 5364.48 5431.59 5465.88 5500.61 5569.56 1703 1.00
theta_1[116] 5583.53 3.77 58.76 5467.77 5544.35 5582.58 5622.27 5697.57 243 1.02
theta_1[117] 5441.18 2.41 52.51 5340.77 5404.59 5441.13 5476.33 5544.94 474 1.02
theta_1[118] 5452.99 2.00 51.87 5351.48 5418.87 5452.33 5487.24 5556.32 671 1.01
theta_1[119] 5506.87 1.35 51.53 5401.91 5473.19 5507.97 5541.81 5607.38 1453 1.00
theta_1[120] 5467.74 1.34 52.21 5366.71 5432.96 5468.08 5502.43 5571.28 1514 1.01
theta_1[121] 5361.04 1.66 54.19 5254.61 5324.27 5361.27 5397.70 5467.21 1069 1.00
theta_1[122] 5383.19 1.35 51.49 5280.53 5349.07 5382.88 5417.04 5484.27 1465 1.00
theta_1[123] 5417.50 1.36 51.52 5314.18 5382.47 5417.99 5452.62 5514.01 1435 1.00
theta_1[124] 5442.21 1.82 51.87 5341.23 5407.25 5442.92 5476.58 5541.96 814 1.01
theta_1[125] 5391.72 1.23 51.97 5291.06 5356.31 5392.40 5426.42 5494.04 1787 1.00
theta_1[126] 5320.06 1.50 55.86 5206.96 5282.96 5320.11 5357.76 5429.06 1379 1.00
theta_1[127] 5407.54 1.37 52.40 5305.82 5372.58 5407.26 5441.46 5512.07 1465 1.00
theta_1[128] 5410.08 1.25 52.17 5307.65 5375.81 5409.73 5444.26 5512.97 1742 1.00
theta_1[129] 5385.92 1.56 52.46 5282.23 5350.80 5385.67 5420.72 5490.47 1124 1.01
theta_1[130] 5404.03 2.42 53.41 5299.11 5368.05 5403.40 5439.28 5511.65 488 1.02
theta_1[131] 5437.00 1.20 51.97 5333.52 5403.23 5436.82 5471.89 5537.79 1889 1.01
theta_1[132] 5496.02 1.11 51.50 5394.51 5462.78 5495.47 5530.17 5598.28 2165 1.00
theta_1[133] 5501.79 1.39 52.09 5398.69 5466.65 5501.85 5537.10 5603.33 1404 1.00
theta_1[134] 5541.19 2.07 54.36 5432.29 5505.98 5542.58 5579.10 5643.70 688 1.01
theta_1[135] 5571.36 1.57 52.00 5466.36 5537.90 5571.59 5606.75 5670.04 1090 1.00
theta_1[136] 5589.30 1.22 51.86 5489.07 5554.61 5588.94 5623.87 5692.32 1802 1.00
theta_1[137] 5588.63 1.26 50.95 5489.34 5553.88 5588.22 5622.94 5690.29 1641 1.00
theta_1[138] 5634.28 1.20 51.66 5532.12 5599.34 5634.17 5670.26 5732.86 1858 1.01
theta_1[139] 5646.12 1.32 51.72 5543.95 5611.94 5645.72 5680.01 5746.20 1534 1.00
theta_1[140] 5611.63 2.27 52.89 5510.91 5575.59 5610.75 5646.30 5717.07 544 1.02
theta_1[141] 5740.11 1.47 53.58 5636.58 5703.94 5739.42 5775.16 5846.59 1334 1.01
theta_1[142] 5805.82 1.64 54.70 5699.90 5768.78 5805.35 5842.11 5913.94 1111 1.00
theta_1[143] 5736.70 4.18 55.69 5631.68 5699.32 5734.83 5772.54 5849.12 178 1.03
theta_1[144] 5844.38 1.26 52.21 5742.08 5809.20 5844.00 5878.75 5948.91 1726 1.00
theta_1[145] 6005.88 4.90 54.62 5894.62 5970.43 6007.19 6042.72 6110.94 124 1.03
theta_1[146] 5962.01 2.00 59.06 5843.63 5922.66 5963.24 6002.63 6073.75 868 1.00
theta_1[147] 6087.51 2.25 53.92 5977.48 6052.31 6088.73 6123.22 6192.19 574 1.02
theta_1[148] 6096.03 1.21 52.83 5990.90 6059.98 6095.88 6131.47 6200.24 1902 1.00
theta_1[149] 6159.89 1.29 52.76 6053.14 6125.12 6159.94 6194.59 6264.11 1666 1.00
theta_1[150] 6165.34 1.14 52.27 6065.10 6129.44 6165.01 6200.55 6267.55 2111 1.00
theta_1[151] 6169.16 1.35 52.38 6065.76 6134.74 6168.72 6202.61 6275.48 1505 1.00
theta_1[152] 6236.05 1.66 53.05 6128.52 6201.78 6236.56 6271.20 6338.55 1022 1.01
theta_1[153] 6270.91 2.29 55.41 6157.37 6235.89 6271.39 6307.88 6377.78 584 1.01
theta_1[154] 6159.67 1.43 53.73 6056.43 6124.08 6158.70 6194.49 6267.07 1404 1.00
theta_1[155] 6082.71 1.18 53.23 5975.78 6047.10 6083.15 6118.36 6184.28 2020 1.00
theta_1[156] 5985.57 2.37 54.09 5881.99 5948.18 5984.11 6021.95 6092.49 520 1.01
theta_1[157] 5907.30 1.52 55.40 5797.66 5870.26 5907.18 5944.59 6016.54 1325 1.01
theta_1[158] 5931.47 1.61 55.86 5820.94 5895.15 5932.18 5969.55 6039.64 1200 1.00
theta_1[159] 5943.40 2.10 58.59 5825.59 5903.88 5943.93 5982.43 6059.87 779 1.01
theta_1[160] 6152.33 3.31 56.67 6040.59 6114.14 6152.46 6191.31 6262.44 294 1.02
theta_1[161] 6169.16 3.46 55.40 6063.38 6132.31 6168.10 6205.17 6280.35 256 1.02
theta_1[162] 6313.29 1.20 53.54 6211.60 6276.04 6313.93 6349.42 6417.35 2004 1.00
theta_1[163] 6451.88 8.10 58.89 6330.93 6413.94 6453.37 6491.97 6561.31 53 1.04
theta_1[164] 6374.96 1.25 52.86 6273.46 6338.90 6374.27 6411.42 6478.32 1791 1.00
theta_1[165] 6372.85 1.25 53.42 6267.58 6337.75 6372.42 6407.71 6480.50 1828 1.00
theta_1[166] 6390.42 1.28 54.18 6283.54 6354.44 6390.41 6427.00 6495.04 1804 1.00
theta_1[167] 6389.40 3.76 55.37 6281.18 6351.64 6389.26 6427.17 6498.15 217 1.02
theta_1[168] 6476.99 1.61 57.09 6367.51 6438.23 6476.86 6515.82 6587.79 1259 1.01
theta_1[169] 6368.94 1.71 60.96 6247.98 6328.97 6369.55 6410.28 6489.29 1268 1.00
theta_1[170] 6357.28 1.83 60.01 6239.10 6317.12 6357.74 6397.51 6474.30 1072 1.00
theta_1[171] 6382.02 1.67 58.69 6265.66 6342.14 6383.25 6421.80 6494.72 1240 1.01
theta_1[172] 6387.83 1.48 59.34 6270.60 6349.01 6388.52 6426.33 6506.10 1601 1.00
theta_1[173] 6560.83 1.93 63.98 6440.39 6517.79 6559.01 6602.46 6691.09 1099 1.01
theta_1[174] 6535.55 1.46 59.69 6417.82 6495.43 6534.97 6575.85 6653.61 1680 1.00
theta_1[175] 6418.12 1.55 60.25 6299.82 6378.77 6418.69 6458.19 6536.35 1504 1.01
theta_1[176] 6490.19 1.67 59.66 6371.19 6450.63 6489.57 6529.83 6609.65 1272 1.00
theta_1[177] 6455.20 1.65 59.19 6337.48 6416.32 6456.02 6494.32 6571.90 1292 1.01
theta_1[178] 6546.10 1.24 57.12 6436.01 6507.71 6545.81 6583.85 6661.77 2112 1.00
theta_1[179] 6699.21 2.25 68.05 6570.05 6652.82 6698.82 6743.59 6835.63 912 1.01
theta_1[180] 6607.28 2.48 70.48 6473.84 6558.83 6606.11 6654.11 6749.21 811 1.01
s[1] -521.12 10.97 72.92 -668.83 -569.71 -518.55 -470.64 -383.31 44 1.06
s[2] -455.10 1.80 60.25 -570.66 -497.12 -455.55 -413.92 -336.99 1119 1.00
s[3] -30.00 1.45 56.55 -139.05 -67.88 -29.68 7.68 83.31 1521 1.00
s[4] -71.71 3.12 57.64 -186.46 -110.30 -71.88 -32.73 40.29 341 1.02
s[5] 398.16 1.25 56.42 287.11 359.75 397.85 435.78 509.36 2042 1.01
s[6] 613.32 1.51 56.48 502.06 575.51 613.17 650.06 724.31 1403 1.00
s[7] 254.01 2.96 58.15 140.96 214.36 254.04 292.93 369.52 385 1.02
s[8] 359.15 3.48 58.75 243.77 320.17 358.90 397.88 473.76 285 1.02
s[9] 174.28 1.52 56.60 65.02 136.48 173.21 212.31 284.62 1379 1.00